Acoubot

Contents

- Abstract

- Introduction

- Functionality specification

- Macro diagram of the system

- Hardware

- Software

- Design

- Validation - the testing program

- Product Operating Instructions

- Summary

- Bibliography

- Appendix

1. Abstract

An Acoubot system serves to enhance speech intelligibility within classrooms. Numerous factors can impede students’ concentration during lectures and their ability to grasp the taught material. One major contributing factor to this issue is the acoustic conditions within classrooms. Typically, classrooms do not conform to recommended acoustic standards. Consequently, students contend with various disturbances originating both externally, such as conversations in adjacent classrooms or hallway noise, and internally, often related to the classroom’s geometry.

Our proposed solution to address acoustic issues in classrooms involves the use of a robot designed to assess its surrounding environment and conduct acoustic measurements. These measurements are then automatically analyzed, allowing users to visualize all acoustic problems within the room via a map. On this map, problematic areas are highlighted, accompanied by suggested solutions aimed at improving speech intelligibility.

2. Introduction

System Overview

The Acoubot system is a user-friendly platform designed to enhance room acoustics without requiring any prior knowledge or expertise in the field. This innovative system automatically detects room parameters and provides users with detailed information regarding acoustic issues and potential solutions. The information is presented on an interactive room map, making it easy for users to understand and implement improvements.

The “AcouBot” system autonomously scans and measures the room, freeing users from the technical aspects of measurement. Instead, users can focus on making acoustic adjustments based on the system’s recommendations and compare the results against predefined benchmarks. The system’s primary objective is to optimize speech intelligibility, ensuring that the identified acoustic problems align with this specific acoustic profile.

Purpose

The Acoubot system serves two primary purposes:

- Enhancing Room Acoustics: The system’s foremost objective is to enhance the acoustics of a room without requiring the involvement of an acoustic professional.

- Automating Benchmarking: The second purpose is to automate the entire benchmarking process, typically conducted manually by a professional. This automation empowers users to make room adjustments based on the robot’s calculated recommendations, simplifying the process and reducing the need for specialized expertise.

Scope

In order to achieve project success, we will define clear objectives and delineate the project’s boundaries by discussing what it does not encompass. Our project involves the development of automatic test equipment for acoustic measurements and the identification of solutions that can enhance speech intelligibility in classrooms. Within the vast realm of acoustics, we will specifically address the following aspects:

Room shape

The system is designed to work with rooms that have boxy shapes. Other room shapes would significantly complicate tasks such as navigation, map creation, acoustic problem-solving, and room dimension measurements.

Echo and Room dimensions

Echo, which occurs when the delay between the original sound and the reflected sound exceeds roughly 1/10 of a second, will not be discussed in this project since it is not typical in classrooms. Accordingly, the effect of room dimensions on echo is treated as insignificant. For reference, the echo effect begins to manifest when the diagonal length of a room exceeds 17.2 meters, and it becomes significant in rooms larger than 140 square meters.
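The 17.2 meter figure is consistent with a 0.1 second delay threshold if one assumes a speed of sound of about 344 m/s and a reflection path of twice the room diagonal (both are assumptions; the source does not state the values it used):

\[d = \frac{c \cdot \Delta t}{2} = \frac{344 \cdot 0.1}{2} = 17.2\ \text{m}\]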

The classroom floor

The classroom’s floor must be flat and devoid of stairs due to the mechanical limitations of the robot.

Acoustic measurements (Reverberation time)

Reverberation time, a parameter influencing speech intelligibility, will be measured to evaluate the room’s acoustic properties and determine the required amount of acoustic absorbers.

Acoustic measurements (Background noise)

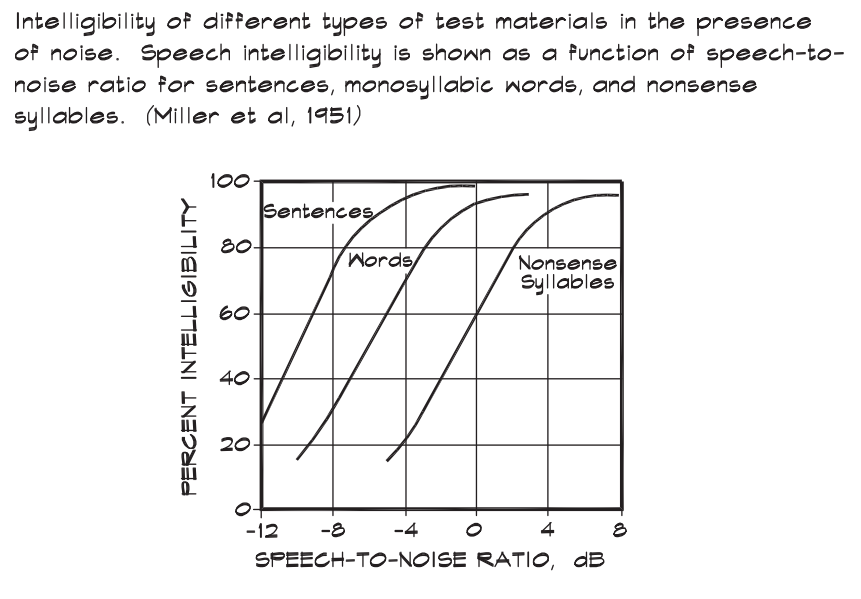

Background noise lowers the signal-to-noise ratio (SNR), and speech intelligibility improves as the SNR increases. Noise levels will be measured at various points within the classroom, noise sources will be identified, and steps to reduce noise levels will be recommended.

Sound insulation between rooms

Acoustic insulation between rooms will not be a focal point of the project. Testing sound insulation would require the robot to exit the classroom, potentially complicating navigation and communication.

Dimensions of the room

Measuring the room’s dimensions is essential for understanding the behavior of sound waves within it. This information will be used for calculations related to practical acoustic solutions and robot navigation.

Definitions and Acronyms

| Term | Definition |
|---|---|
| RT_60 | Reverberation time: the time it takes for the sound level to decay by 60 dB after the source stops |
| Echo | A reflected sound that arrives late enough to be heard as a distinct repetition of the original sound |
| SI | Speech intelligibility |
| SNR | Signal-to-Noise Ratio |
| Reflection | Direction change of a wavefront at an interface between two different media so that the wavefront returns into the medium from which it originated |
| Refraction | Change in the direction of a wave caused by a change in its speed as it passes from one medium into another |
| Diffraction | Bending or spreading of a wave when it encounters an obstacle or emerges from an opening. Diffraction effects are more pronounced when the size of the obstacle or opening is comparable to the wavelength of the wave |
| MU | Mobile Unit |
| SU | Stationary Unit |
| MP | Measurement Point |
| STI | Speech Transmission Index |
| RPi | Raspberry Pi controller |
| RF | Radio Frequency |
| GPIO | General Purpose Input/Output (RPi) |
| dBFS | Decibels relative to full scale |
| ESS | Exponential Sine Sweep |

Theoretical background

Theoretical Problem survey

According to academic research, a significant number of classrooms fall short of standard acoustic requirements. For instance, in 11 active Korean university classrooms, adult students experienced average speech levels of 51.5 dBA and noise levels of 44.3 dBA, which gives a speech-to-noise ratio of only 7.2 dB. Poor classroom acoustics have a direct adverse impact on speech intelligibility in university classrooms and labs, and even more so in schools for younger students.

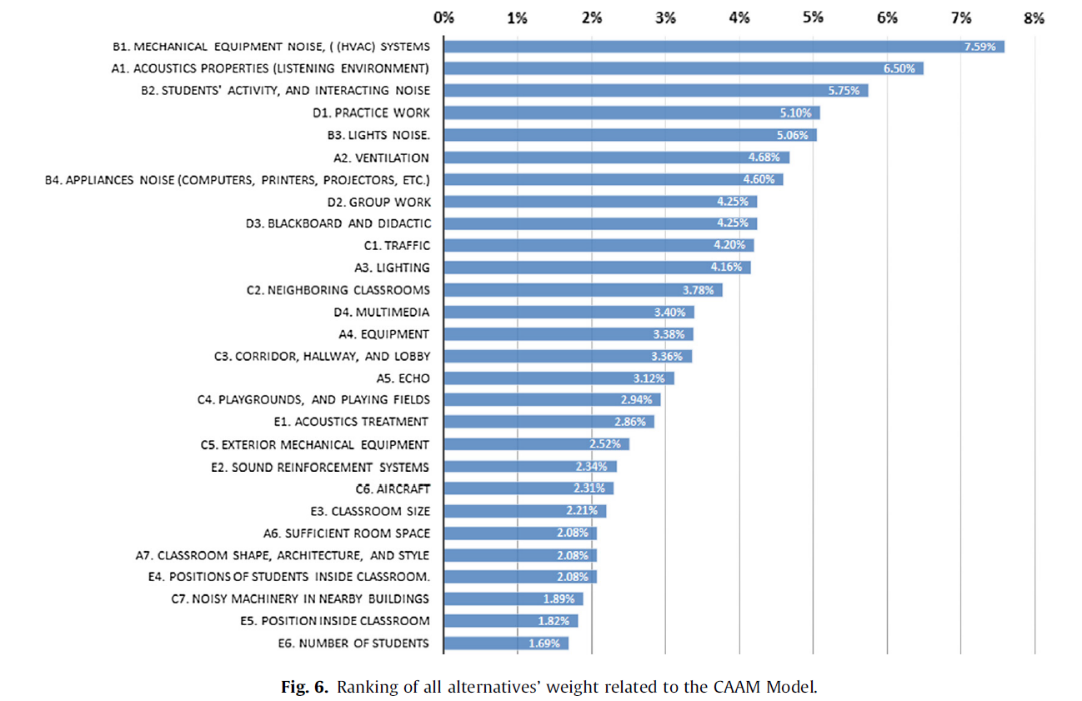

In 2016, an assessment model (CAAM) categorized factors affecting speech intelligibility, extending beyond classroom acoustics to encompass lighting, proper ventilation (temperature-related, not noise-related), and teaching style. Among these parameters, it’s evident that acoustics and noise have the most pronounced influence on speech intelligibility, as indicated by the chart below.

The ANSI S12.60 standard for classroom acoustics addresses both reverberation time and background noise and sets maximum permissible levels for each:

The maximum permitted reverberation time in an unoccupied, furnished classroom is 0.6 seconds for classrooms with a volume under 283 m³. The maximum allowable background noise level is 35 dBA.
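As an illustration, the two limits quoted above can be expressed as a simple check. This is a minimal sketch, not the project's implementation; the function name `check_classroom` and the message format are hypothetical.

```python
# Limits quoted in the text for ANSI S12.60 (classrooms under 283 m^3).
MAX_RT60_S = 0.6
MAX_NOISE_DBA = 35.0
MAX_VOLUME_M3 = 283.0


def check_classroom(volume_m3, rt60_s, noise_dba):
    """Return a list of violations; an empty list means both limits are met.

    Hypothetical helper for illustration only.
    """
    if volume_m3 >= MAX_VOLUME_M3:
        raise ValueError("the limits used here apply only to rooms under 283 m^3")
    problems = []
    if rt60_s > MAX_RT60_S:
        problems.append(f"RT60 of {rt60_s:.2f} s exceeds {MAX_RT60_S} s")
    if noise_dba > MAX_NOISE_DBA:
        problems.append(f"noise of {noise_dba:.1f} dBA exceeds {MAX_NOISE_DBA} dBA")
    return problems


if __name__ == "__main__":
    # Example: a 200 m^3 room with the 44.3 dBA noise level from the survey above
    # and an assumed (made-up) reverberation time of 0.9 s.
    for line in check_classroom(200.0, 0.9, 44.3):
        print(line)
```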

Theoretical Solution Survey

To address these issues and enhance speech intelligibility, we will concentrate on the frequency ranges relevant to human speech, particularly consonants, which contain vital articulation information. Our approach involves leveraging principles of Reflection, Refraction, and Diffraction.

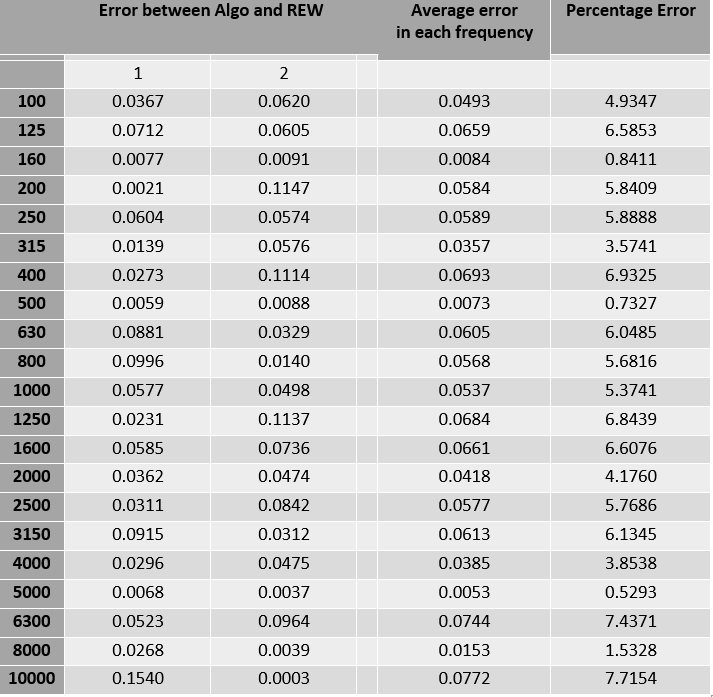

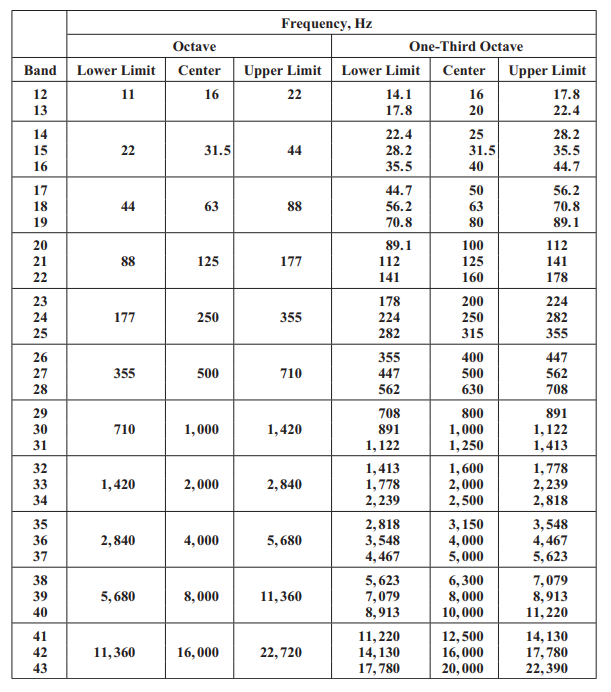

We will isolate the acoustic problem by conducting measurements at multiple points within the room. We will follow the ISO 140 standard to measure noise sources when they are stationary and situated in the lecturer’s position. This will enable us to assess the room’s response to various frequencies and identify those that can impact speech intelligibility. Additionally, we will measure the decay time at different frequencies, determining the room’s reverberation time. Using the Sabine Formula, we can calculate the necessary absorption to achieve the desired reverberation time. The placement of absorbers will be influenced by the room’s shape, and we will analyze frequencies at which standing waves may form.
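The Sabine calculation mentioned above can be sketched as follows. This is a hedged example using the metric form of the Sabine formula, RT60 = 0.161 · V / A, where V is the room volume in m³ and A is the total absorption in m² sabins. The function names and the example room are assumptions made for illustration.

```python
SABINE_CONSTANT = 0.161  # s/m, metric form of the Sabine formula


def sabine_rt60(volume_m3, absorption_m2):
    """Reverberation time predicted by Sabine: RT60 = 0.161 * V / A."""
    return SABINE_CONSTANT * volume_m3 / absorption_m2


def extra_absorption_needed(volume_m3, measured_rt60_s, target_rt60_s):
    """Additional absorption (m^2 sabins) needed to reach the target RT60.

    The current absorption is inferred from the measured RT60 by inverting
    the Sabine formula. Returns 0 if the room already meets the target.
    """
    current = SABINE_CONSTANT * volume_m3 / measured_rt60_s
    required = SABINE_CONSTANT * volume_m3 / target_rt60_s
    return max(0.0, required - current)


def absorber_area_m2(extra_absorption_m2, absorption_coefficient):
    """Panel area needed when each m^2 of panel has the given coefficient (0..1]."""
    return extra_absorption_m2 / absorption_coefficient


if __name__ == "__main__":
    # Hypothetical 200 m^3 classroom measured at 1.0 s, target 0.6 s.
    extra = extra_absorption_needed(200.0, 1.0, 0.6)
    print(f"extra absorption: {extra:.1f} m^2 sabins")
    print(f"panel area at alpha = 0.8: {absorber_area_m2(extra, 0.8):.1f} m^2")
```

The sketch treats the room as a single diffuse volume and ignores frequency dependence; in practice the calculation would be repeated per frequency band.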

Background noise within the classroom will also be measured to identify common sources, such as air conditioner motors and external noise. Multiple measurements will be taken throughout the classroom to pinpoint the noise’s location. Our approach combines various acoustic treatment methods to provide the most effective solution for improving speech intelligibility.

Discussion and Conclusion

During our investigation into classroom acoustics, we discovered numerous acoustic phenomena that can impact speech intelligibility. However, we excluded certain phenomena, such as echoing voices caused by hands-free communication, as they are not typical in classrooms due to their smaller size. Standard classroom dimensions are insufficient for these phenomena to manifest, so they were not investigated further.

Technological background

Technological Problem Survey

The project faces several technical challenges that need to be addressed:

-

Automatic Acoustic Measurements: One challenge is the automated execution of acoustic measurements at various points within the room. The issue here lies in determining how to select the appropriate measurement points in relation to the room’s dimensions and size.

-

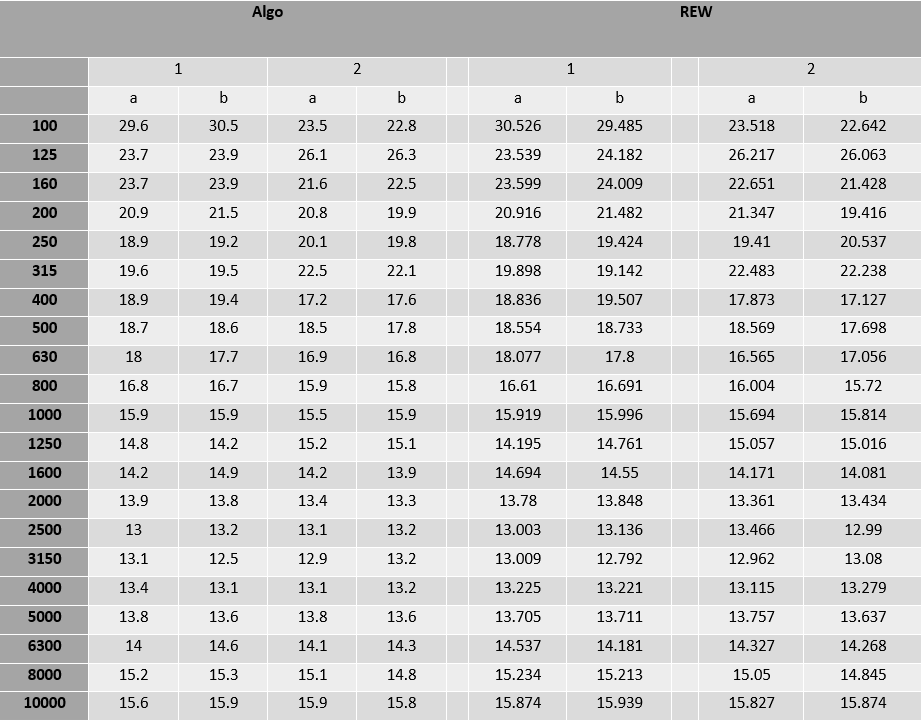

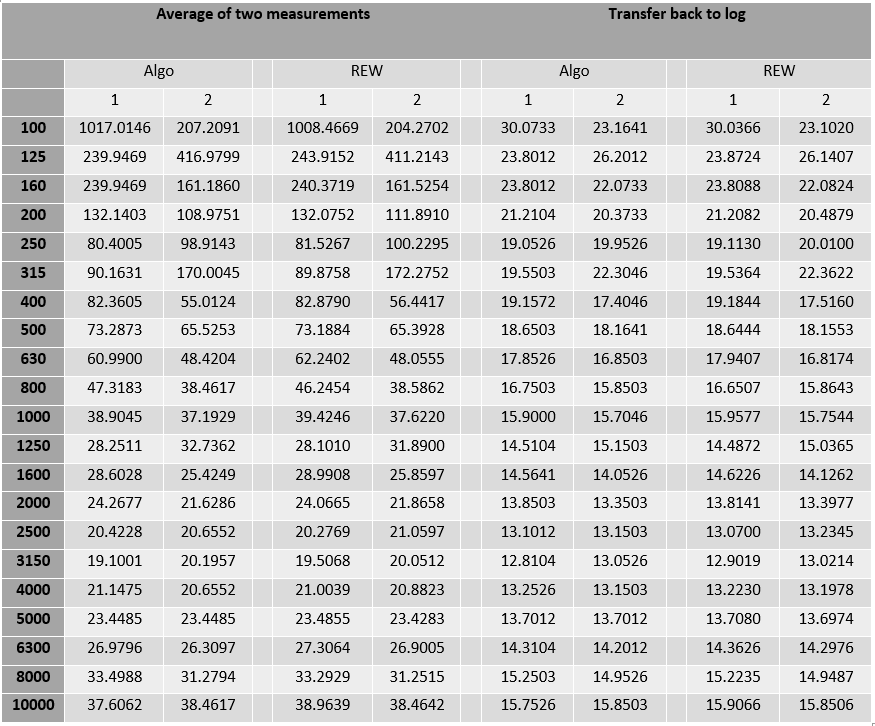

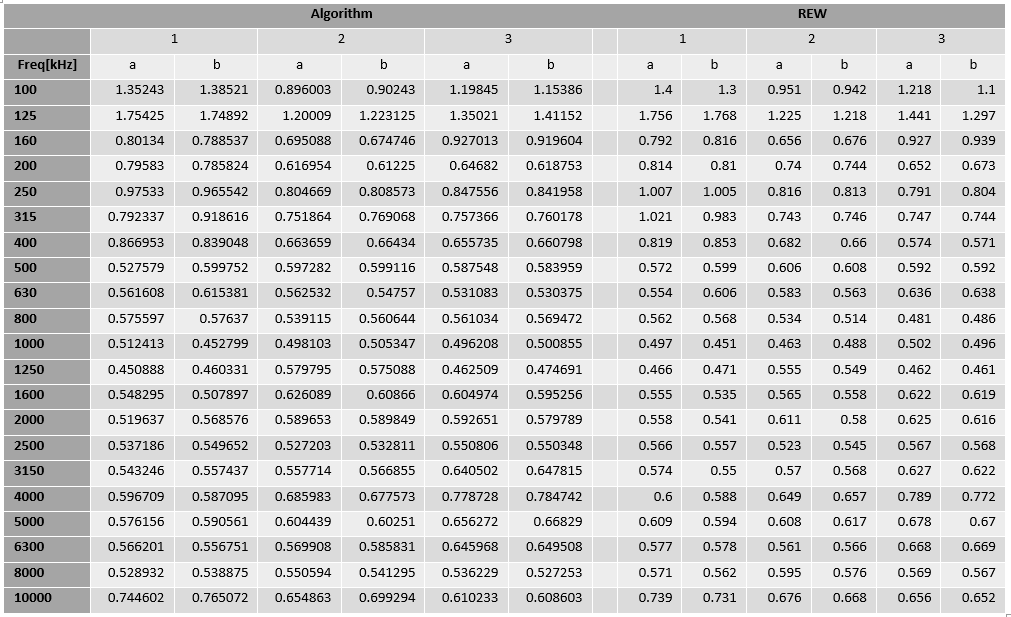

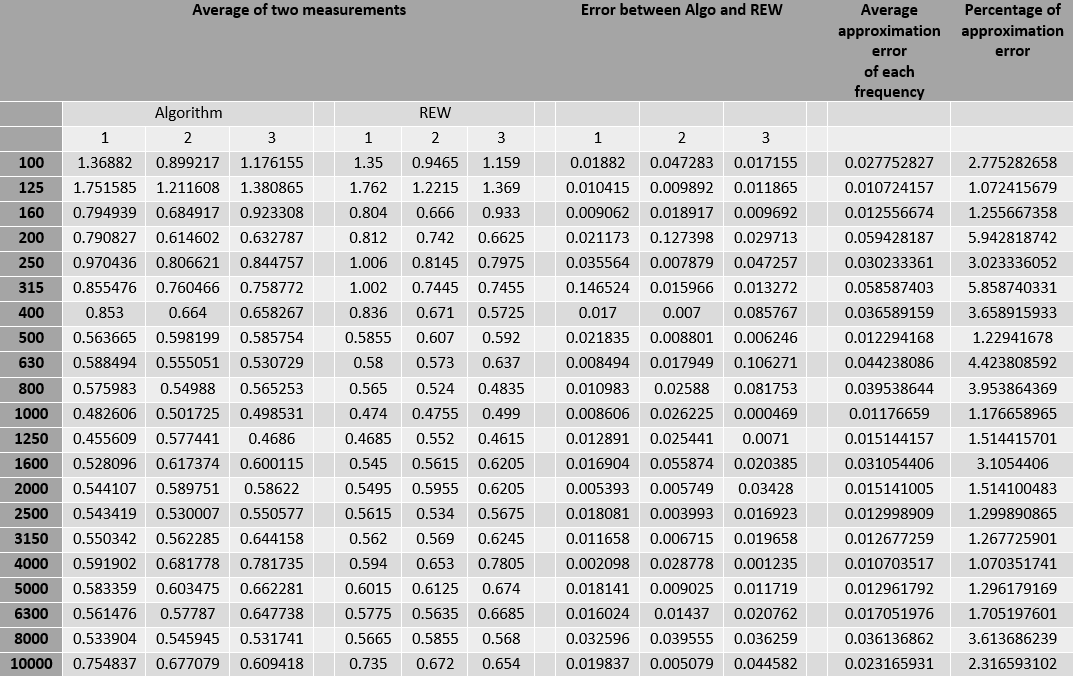

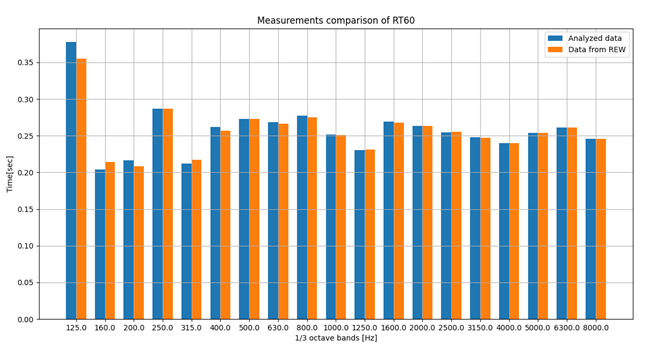

Data Analysis Accuracy: Ensuring precise and reliable analysis of recorded data is crucial. This involves accurately measuring parameters like reverberation time and background noise across all relevant frequency bands. The problem is to devise effective methods for this analysis and to validate the results through a sanity test.

-

Room Mapping: Mapping the room is essential for the algorithms that calculate acoustic solutions. To achieve this, we need accurate measurements of the room’s dimensions, which presents its own set of challenges.

-

Robot Localization: For the robot’s navigation algorithm to function effectively, it must be able to identify its location within the room and determine its angle relative to the map’s axes. This requires solutions for precise localization.

Addressing these technical challenges is critical to the successful execution of the project and the development of an efficient Acoubot system.

Technological Solution Survey

To address the technical challenges encountered in the project, several solutions and tools can be explored:

- Hand-held XL2 Analyzer: The Hand-held XL2 Analyzer is a versatile instrument that serves as a Sound Level Meter, a professional Acoustic Analyzer, a precision Audio Analyzer, and a comprehensive Vibration Meter all in one. It offers various analyzers, including capabilities for measuring RT60 (Reverberation Time), Speech Intelligibility STIPA (additional components may be required), and Noise curves. The data collected can be conveniently stored as a .txt file on an SD card, allowing for further analysis.

- Bedrock Intelligibility Measurement Kit: This kit comprises a Bedrock SM50 analyzer and a Bedrock BTB65 Talkbox Loudspeaker. It is specifically designed for conducting STIPA tests and measuring Noise levels (Pink and White). Notably, the kit plays back signals with automatically calibrated spectrum and levels, eliminating the need for user calibration.

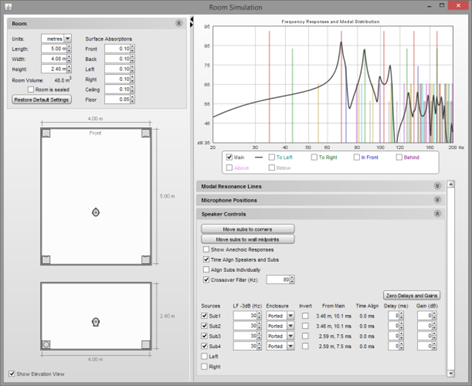

- REW (Room EQ Wizard): REW is a free room acoustics analysis software that facilitates the measurement and analysis of room and loudspeaker responses. It provides tools for displaying equalizer responses and can automatically adjust parametric equalizer settings to counteract the effects of room modes, ensuring that responses align with a target curve.

These solutions offer valuable resources for addressing the technical challenges involved in the project, enabling accurate and efficient acoustic measurements, analysis, and room treatment recommendations.

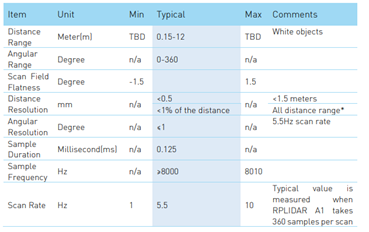

Sensors

-

Microphone: For acoustic measurements, we have opted for the miniDSP UMIK-1 measurement microphone. This microphone offers user-friendly operation and includes complimentary software for conducting measurements, decoding data, and comparing our algorithms. Specifically designed for acoustic testing, it connects via USB, making it exceptionally convenient when working with the Raspberry Pi (RPI). Moreover, it does not require any installations and is tailored for the LINUX operating system.

-

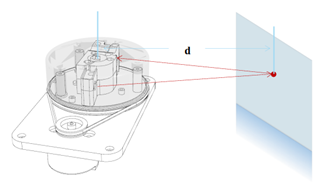

LIDAR:

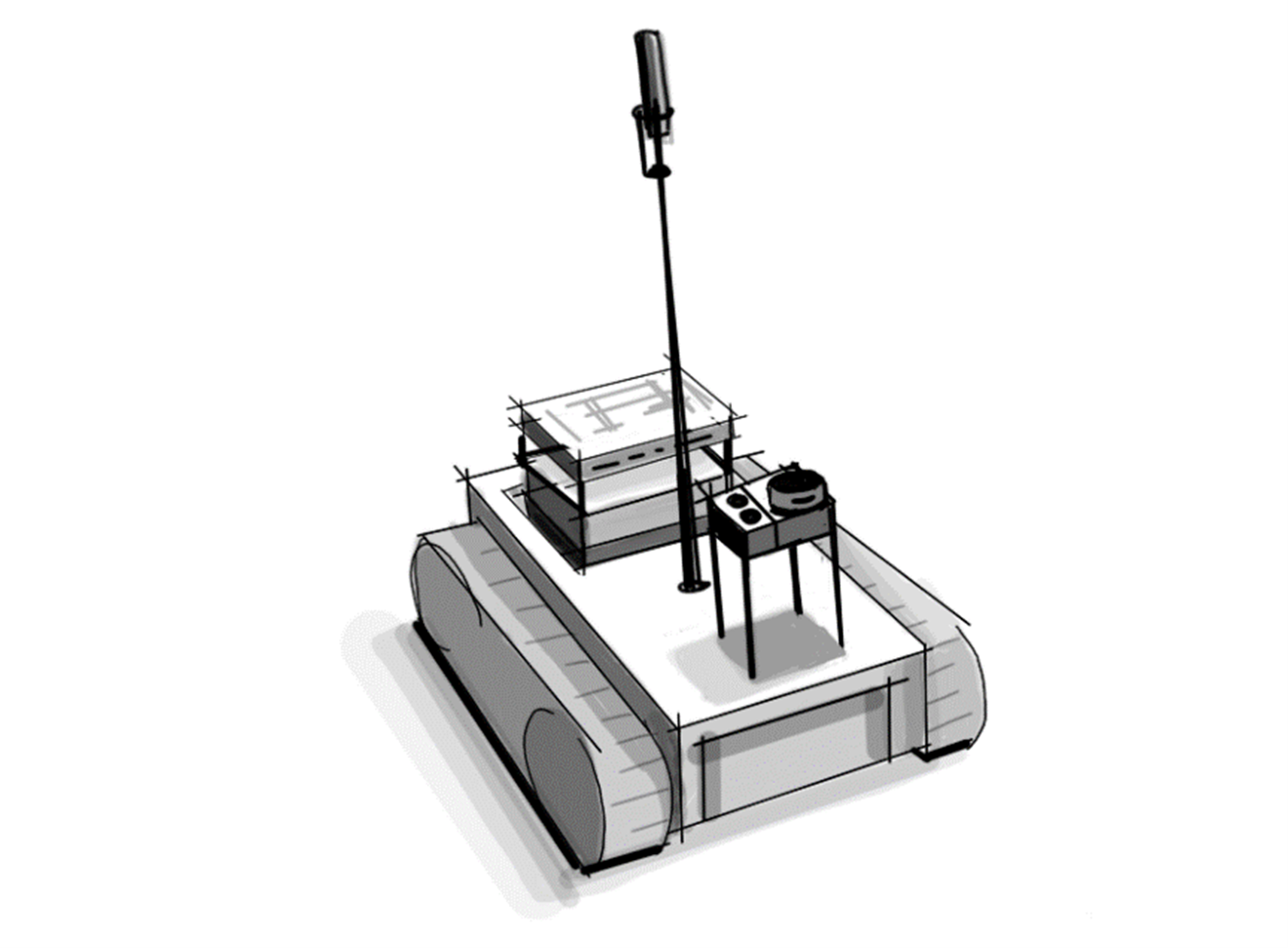

Actuators

The stability of the robot frame is of paramount importance since it supports the microphone, creating an inverted pendulum effect. To enhance stability, we have chosen to use four DC electric wheel motors with a 1:48 gear ratio. These motors are well-suited for robotics and model vehicles, delivering a maximum torque of 800 gf·cm at the minimum supply voltage of 3 V.

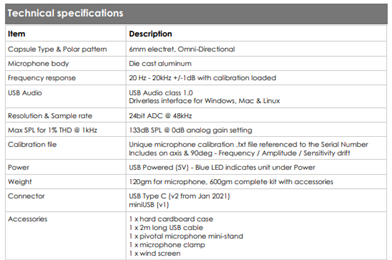

Motor shield HAT

To drive the motors, which draw high currents, we have selected a motor controller HAT compatible with the RPi. This ready-made module eliminates the need for excessive wiring: it mounts directly on top of the controller and connects to all of its GPIO pins. It is also designed to accommodate additional sensors, such as IR and ultrasonic sensors, with high-voltage protection on the GPIO connections.

Communication

Efficient communication is crucial for transmitting recording information, which can vary in size from tens to hundreds of megabytes. We considered several options, including Wi-Fi, Bluetooth, and RF networks. Given that the RPI already supports Wi-Fi and Bluetooth interfaces, we have chosen to utilize one of these options for convenience. The selection of Wi-Fi for transferring files is primarily based on the size of the data that needs to be transferred.
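A rough size estimate shows why the recordings reach this order of magnitude. The sketch below assumes uncompressed mono PCM at 48 kHz and 24 bits per sample; the bit depth, recording duration, number of measurement points, and link speed are illustrative assumptions, not project figures.

```python
def pcm_size_bytes(duration_s, sample_rate_hz=48_000, bits_per_sample=24, channels=1):
    """Size of the raw PCM payload of a recording (file header ignored)."""
    return int(duration_s * sample_rate_hz * (bits_per_sample // 8) * channels)


def transfer_time_s(size_bytes, link_mbit_s):
    """Ideal transfer time over a link with the given net throughput."""
    return size_bytes * 8 / (link_mbit_s * 1_000_000)


if __name__ == "__main__":
    one_recording = pcm_size_bytes(60)   # one hypothetical 60 s recording
    all_recordings = 20 * one_recording  # 20 hypothetical measurement points
    print(f"one recording: {one_recording / 1e6:.2f} MB")
    print(f"20 recordings: {all_recordings / 1e6:.1f} MB")
    print(f"transfer at 20 Mbit/s: {transfer_time_s(all_recordings, 20):.0f} s")
```

Under these assumptions a full measurement session lands in the hundreds of megabytes, which favors Wi-Fi over Bluetooth.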

Embedded device

The embedded device faces certain constraints:

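- The sampling frequency must be at least twice the highest frequency to be captured (the Nyquist criterion). For the audible range of up to about 20 kHz, this typically means a sampling rate of 44.1 kHz or higher for reliable signal recovery. In our case, the chosen microphone’s sample rate is 48 kHz.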

- Connectivity is critical as we will be connecting multiple sensors to the embedded device. The sensors include LIDAR, ultrasonic, and the microphone, each with specific interface requirements. The microphone and LIDAR interface via USB.

- The system must be capable of storing measurements, necessitating the connection of a memory card.

Language

Python serves as the primary programming language for our project due to its compatibility with most modules used, including the RPI controller. It offers dedicated libraries for sensor data reading and control. Python is well-suited for both Linux and Windows environments, offering an abundance of online resources and examples. Python’s flexibility is advantageous for algorithm development, testing, and debugging. While it may have slightly reduced speed compared to C++, it excels in ease of use and code readability. For tasks involving lengthy for loops, we prefer to use more efficient syntax or linear algebra methods.

3. Functionality specification

The Acoubot system is designed to perform various acoustic measurements and provide acoustic solutions for improving room acoustics. Here are the key functionalities and specifications of the system:

- Room Measurement and Mapping:
  - The system measures the dimensions of the room and creates a map.
  - It determines where to take acoustic measurements based on the room map.
- Acoustic Measurements:
  - The system calculates the reverberation time in the room.
  - It measures background noise levels.
- Data Transfer:
  - All measurements, including acoustics and room dimensions, are transferred to a computer with a dedicated application for further analysis.
- Acoustic Solutions:
  - The application provides acoustic solutions if necessary to enhance room acoustics.
- Verification Measurements:
  - After implementing an acoustic solution, a second measurement can be performed for verification.
- Room Shape:
  - The system is optimized for boxy-shaped rooms.
  - It prefers the angle between two nearby walls to be 90 degrees, but it can handle angles within ±5 degrees.
- Room Size:
  - The system can handle rooms with a maximum size of 127 square meters (distance between two far corners of the room not exceeding 16 meters).
- Ceiling Height:
  - The maximum height of the ceiling that the system can work with is 5 meters.
- Compatibility:
  - The system is not compatible with rooms featuring glass doors or walls. These surfaces should be covered when using Acoubot.
- Device Dimensions:
  - The device’s dimensions are 23 cm in length, 13 cm in width, and 110 cm in height.
- Weight:
  - The device weighs 1.8 kilograms.
- Rechargeable Battery:
  - The system is equipped with a rechargeable battery.
  - UPS (Uninterruptible Power Supply) provides 2 hours of operation.
  - RC (Remote Control) battery offers 5 hours of usage time.
- Usage Time:
  - The system can operate for approximately 4 hours on a single charge.
- Wi-Fi:
  - The system utilizes a 2.4GHz Wi-Fi connection for data transfer and communication.

These specifications define the capabilities and limitations of the Acoubot system, ensuring it is well-suited for measuring and improving room acoustics within the specified parameters.
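The room limits listed above could be checked before a scan along these lines. This is a minimal sketch; the function name `room_issues` is hypothetical, and it assumes that the "distance between two far corners" refers to the floor diagonal.

```python
import math

# Limits taken from the specification list above.
MAX_AREA_M2 = 127.0
MAX_DIAGONAL_M = 16.0
MAX_CEILING_M = 5.0
ANGLE_TOLERANCE_DEG = 5.0


def room_issues(length_m, width_m, height_m, corner_angle_deg=90.0):
    """Return the list of specification limits that a room violates."""
    issues = []
    if length_m * width_m > MAX_AREA_M2:
        issues.append("floor area exceeds 127 m^2")
    # Assumption: the 16 m limit applies to the floor diagonal.
    if math.hypot(length_m, width_m) > MAX_DIAGONAL_M:
        issues.append("floor diagonal exceeds 16 m")
    if height_m > MAX_CEILING_M:
        issues.append("ceiling is higher than 5 m")
    if abs(corner_angle_deg - 90.0) > ANGLE_TOLERANCE_DEG:
        issues.append("wall angle is outside 90 +/- 5 degrees")
    return issues


if __name__ == "__main__":
    print(room_issues(10.0, 8.0, 3.0))         # a room within all limits
    print(room_issues(12.0, 12.0, 6.0, 80.0))  # a room violating every limit
```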

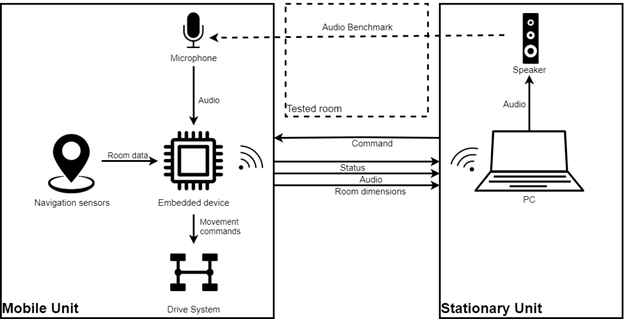

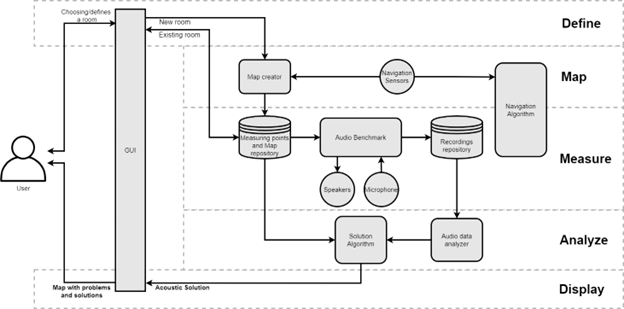

4. Macro diagram of the system

Whole system

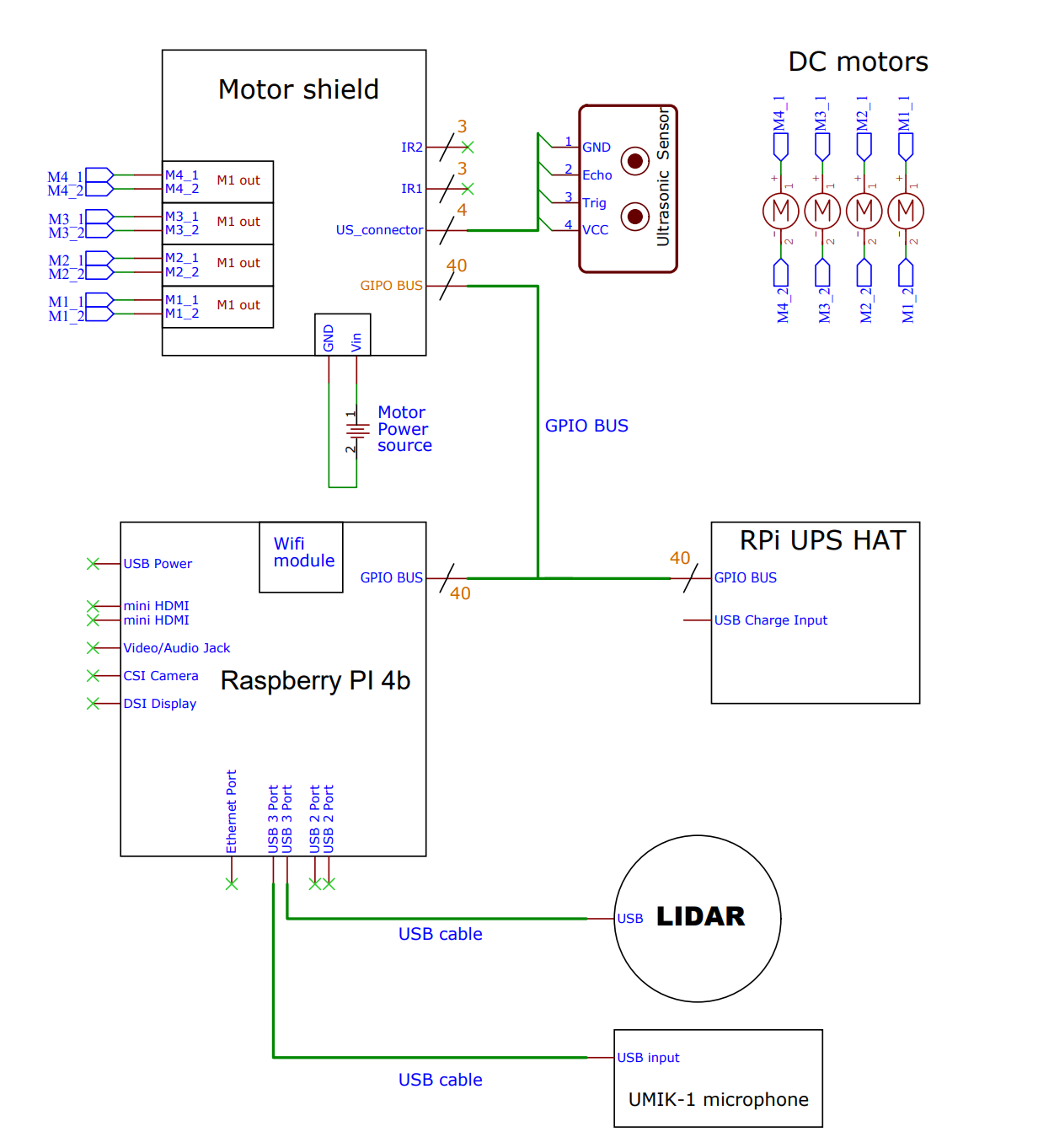

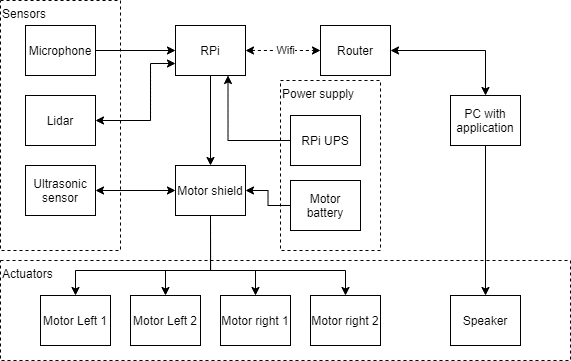

Figure 4-1: Hardware architecture


Mobile unit

Diagram

Figure 4-2: Mobile unit diagram


| Index | Interface | Notes |
|---|---|---|
| 1 | USB | |
| 2 | Power, I2C | I2C for reading battery status, RPi power supply |
| 3 | USB | |
| 4 | GPIO | |
| 5 | GPIO | |
| 6 | Power | Motor high current power supply |
| 7 | GPIO | PWM |

Table 2: Mobile unit interfaces

Electric circuit

Figure 4-3: Mobile unit electric circuit


Stationary unit

Figure 4-4: Stationary unit diagram

| Index | Interface | Notes |
|---|---|---|
| 1 | Bluetooth/Wired | Test signal |
| 2 | USB | Communication with robot |

Table 3: Stationary unit interfaces

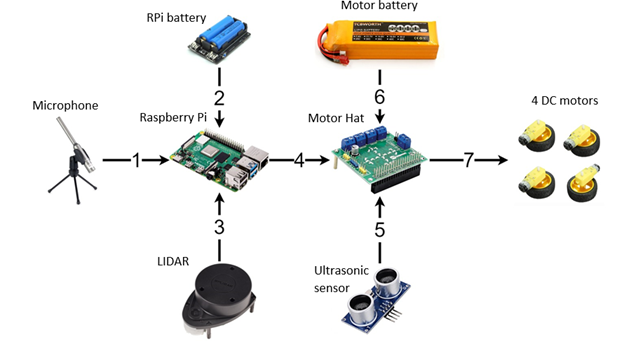

5. Hardware

Block diagram

Figure 5-1: Full block diagram

Controller - Raspberry Pi 4b

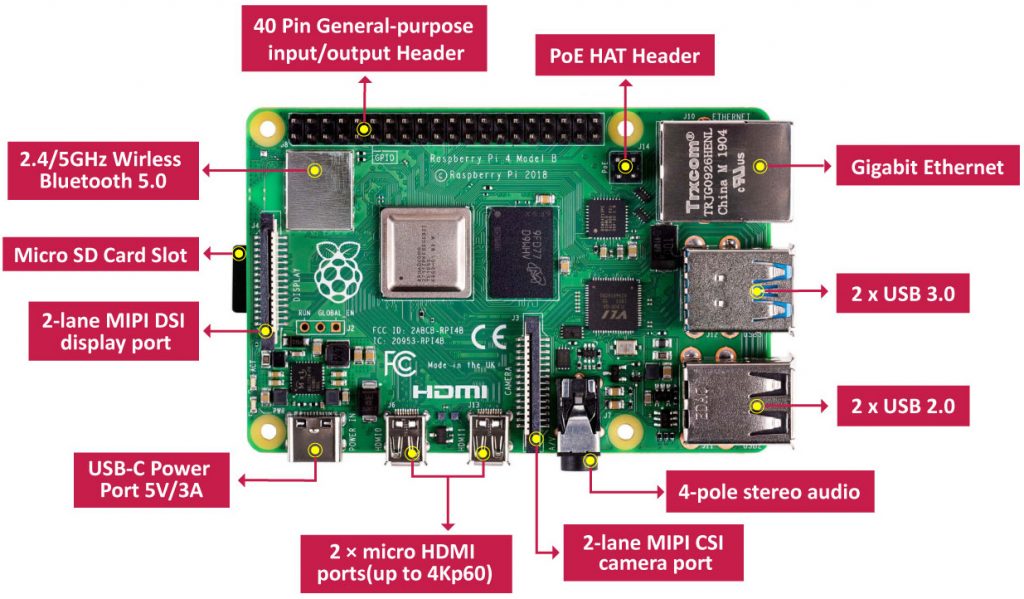

The core of our mobile unit is the Raspberry Pi 4b. This versatile single-board computer serves as the central processing unit for our system, orchestrating the operation of sensors and actuators while also providing onboard Wi-Fi connectivity. With an embedded Python interpreter, the Raspberry Pi seamlessly integrates into our system’s architecture. Here are some key details about the Raspberry Pi 4b:

Figure 5-2:RPi model 4b

-

CPU: The Raspberry Pi 4b is powered by a Broadcom BCM2711 CPU. Its micro-architecture, ARM Cortex-A72, is commonly found in popular smartphone CPUs, ensuring robust performance.

-

RAM: The Raspberry Pi 4b is equipped with 2 GB of RAM, providing sufficient memory for our system’s computational needs.

-

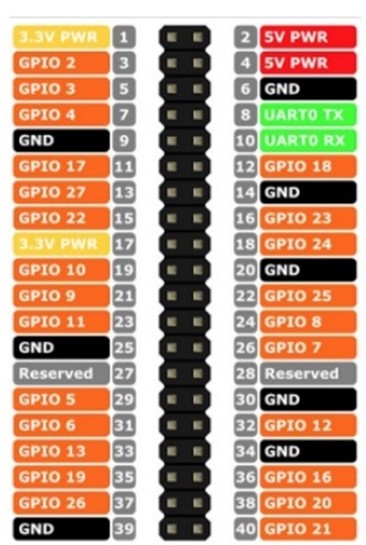

General-Purpose Input-Output (GPIO): The GPIO interface consists of a 40-pin pinout that allows us to connect various sensors and actuators, including LEDs and motors. These I/O pins are digital in nature, so analog sensors require Analog-to-Digital Conversion (ADC) for compatibility.

-

Modularity: One of the standout features of the GPIO interface for our project is its support for modular components that can be easily attached, much like building blocks. These modules are pre-designed circuits equipped with libraries of code, enabling us to activate their specific features by implementing pre-made functions.

The Raspberry Pi 4b’s combination of processing power, memory, GPIO flexibility, and modularity makes it an ideal choice for the core of our mobile unit, facilitating seamless integration and control of various sensors and actuators in our Acoubot system.

Figure 5-4:GPIO

Sensors

LIDAR

Figure 5-5:Lidar work concept

The selection of the LIDAR sensor, specifically the RPLIDAR, was driven by its capability to provide the Mobile Unit (MU) with essential functionalities, including self-localization for indoor navigation and the acquisition of room dimension data for acoustic measurements.

Here are some key details about the RPLIDAR sensor:

- Laser Triangulation Principle: The RPLIDAR sensor is designed based on the laser triangulation principle. It employs high-speed vision acquisition and processing hardware developed by SLAMTEC to precisely measure distances.

- High-Speed Data Acquisition: The RPLIDAR system is capable of measuring distance data at a rate exceeding 8000 times per second. This high-speed data acquisition ensures accurate and real-time distance measurements.

- High-Resolution Output: The sensor provides high-resolution distance output, with a measurement error of less than 1% of the measured distance.

- Infrared Laser Emission: RPLIDAR emits a modulated infrared laser signal, which is projected onto the objects in its vicinity. The laser signal is then reflected by these objects.

- Vision Acquisition and Processing: The vision acquisition system within the RPLIDAR samples the returning laser signal. The DSP (Digital Signal Processor) embedded in the RPLIDAR sensor processes this sampled data. The resulting distance and angle values between the objects and the RPLIDAR sensor are output through a communication interface.

- 360-Degree Scanning: To achieve comprehensive environmental awareness, the RPLIDAR sensor is mounted on a spinning rotator equipped with a built-in angular encoding system. During rotation, the sensor performs a 360-degree scan of the current environment.

Overall, the RPLIDAR sensor’s ability to rapidly and accurately measure distances, combined with its 360-degree scanning capability, makes it an invaluable component in our Acoubot system, enabling self-localization and room dimension data acquisition for acoustic measurements.
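Since the sensor reports angle and distance pairs, room dimensions can in principle be derived by converting a scan to Cartesian points. The following is a minimal sketch, not the project's mapping algorithm. It assumes a box-shaped, empty room with the sensor axes aligned to the walls, and the function names are hypothetical.

```python
import math


def polar_to_cartesian(scan):
    """Convert (angle_deg, distance_m) pairs to (x, y) points around the sensor."""
    points = []
    for angle_deg, distance_m in scan:
        angle = math.radians(angle_deg)
        points.append((distance_m * math.cos(angle), distance_m * math.sin(angle)))
    return points


def bounding_box_dimensions(points):
    """Extent of the points along x and y.

    This equals the room's length and width only under the stated
    assumptions (axis-aligned walls, no furniture in the scan plane).
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return max(xs) - min(xs), max(ys) - min(ys)


if __name__ == "__main__":
    # Synthetic scan: sensor in the middle of a 4 m x 3 m room,
    # one sample straight at each wall.
    scan = [(0.0, 2.0), (90.0, 1.5), (180.0, 2.0), (270.0, 1.5)]
    length, width = bounding_box_dimensions(polar_to_cartesian(scan))
    print(f"length = {length:.2f} m, width = {width:.2f} m")
```

A real scan contains thousands of noisy samples, so a practical version would fit lines to the wall points rather than take raw extremes.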

Figure 5-6: Lidar specification

Ultrasonic

Figure 5-7: Ultrasonic sensor

The ultrasonic sensor serves a crucial role in detecting the height of the room as part of the room dimension data. Specifically, we use the HC-SR04 ultrasonic sensor, which relies on SONAR (Sound Navigation and Ranging) principles to accurately measure distances. Here are the key features and details of the HC-SR04 ultrasonic sensor:

- Non-contact Range Detection: The HC-SR04 ultrasonic sensor excels at non-contact range detection. It provides highly accurate and stable distance measurements without the need for physical contact with objects or surfaces.

- Wide Measurement Range: This sensor is capable of measuring distances ranging from 2 centimeters to 400 centimeters (approximately 1 inch to 13 feet). Its versatility makes it suitable for a wide range of applications.

- Robust Performance: The HC-SR04’s operation remains unaffected by external factors such as sunlight or the color of objects. Unlike some other sensors, it can reliably operate in various lighting conditions, including outdoors.

- Ultrasonic Transmitter and Receiver: The sensor is equipped with both an ultrasonic transmitter and receiver module. These components play vital roles in its functionality.

- Transducers: On the front of the ultrasonic range finder, there are two metal cylinders known as transducers. A transducer converts energy from one form to another, here between electrical signals and ultrasonic (mechanical) vibrations. The HC-SR04 has two transducers: the transmitting transducer and the receiving transducer.

- Transmitting Transducer: The transmitting transducer converts an electrical signal into an ultrasonic pulse. This pulse is emitted into the environment and travels until it encounters an object.

- Receiving Transducer: The receiving transducer detects the reflected ultrasonic pulse when it returns after bouncing off an object. It converts this reflected pulse back into an electrical signal.

The HC-SR04 ultrasonic sensor’s combination of accurate range detection, wide measurement range, and robust performance makes it an excellent choice for measuring room height in our Acoubot system. It plays a vital role in gathering essential room dimension data for our acoustic measurements.
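The distance follows from the round-trip time of the pulse: the pulse travels to the surface and back, so the one-way distance is half of speed times time. The sketch below covers only this arithmetic and leaves out the GPIO timing code. The speed of sound (343 m/s, roughly 20 °C) and the sensor mounting height are assumptions, and the function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at about 20 degrees C
MIN_RANGE_M = 0.02          # 2 cm, lower limit quoted above
MAX_RANGE_M = 4.00          # 400 cm, upper limit quoted above


def echo_to_distance_m(echo_time_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """One-way distance for a measured round-trip echo time.

    Returns None when the result falls outside the sensor's stated range.
    """
    distance = speed_m_s * echo_time_s / 2.0
    if distance < MIN_RANGE_M or distance > MAX_RANGE_M:
        return None
    return distance


def ceiling_height_m(echo_time_s, sensor_height_m):
    """Room height = sensor mounting height + measured distance to the ceiling."""
    distance = echo_to_distance_m(echo_time_s)
    if distance is None:
        return None
    return sensor_height_m + distance


if __name__ == "__main__":
    # Hypothetical: sensor mounted 1.10 m above the floor, echo after 10 ms.
    print(ceiling_height_m(0.010, 1.10))
```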

UMIK-1 microphone

Figure 5-8: Microphone specifications

The UMIK-1 microphone is a crucial component for acoustic measurements in our project due to its specialized design for this purpose. Here are the key features and details about the UMIK-1 microphone:

- Purpose-Built for Acoustic Measurement: The UMIK-1 microphone is specifically designed for acoustic measurements, making it an ideal choice for our project’s acoustic data collection needs.

- Omni-Directional Pickup Pattern: This microphone features an omni-directional (or nondirectional) pickup pattern. In ideal conditions, an omni-directional microphone captures sound evenly from all directions, forming a spherical response pattern in three dimensions.

- Low Noise: The UMIK-1 microphone is engineered to provide low noise, ensuring that the recorded acoustic data is clean and accurate.

- Plug & Play: It offers a plug-and-play setup, simplifying the integration of the microphone into our system without the need for complex configurations or driver installations.

- Frequency-Dependent Polar Pattern: While an omnidirectional microphone theoretically has a perfect spherical response, in reality, the microphone’s body size can affect its polar pattern, especially at higher frequencies. The polar response may exhibit slight flattening due to the microphone’s dimensions, which can impact sounds arriving from the rear. This effect becomes more pronounced as the diameter of the microphone approaches the wavelength of the specific frequency in question.

- Miniature Diameter: To mitigate the flattening of the polar response, smaller-diameter microphones are preferred for achieving the best omnidirectional characteristics, particularly at higher frequencies.

In summary, the UMIK-1 microphone’s specialized design, omni-directional pickup pattern, low noise, and ease of use make it an excellent choice for acoustic measurements in our project. It ensures accurate and reliable data collection for assessing room acoustics and implementing acoustic solutions.

Actuators

DC motors

In our system, we employ standard dual-shaft geared DC motors, utilizing a total of four motors with one dedicated to each wheel. Initially, we experimented with a robot design featuring tracks for smoother pivoting. However, this design proved to be high-maintenance. We subsequently transitioned to a wheel-based design, which still allowed for sufficiently smooth pivoting.

Motor shield module

The Motor Shield Module serves as a crucial component in our system, acting as an extension or “hat” for the Raspberry Pi. This module facilitates the control of up to four separate DC motors. It achieves this through the inclusion of two Dual H-bridge IC L293D chips, which function as the motor controllers. Each of these chips can independently control two separate motors.

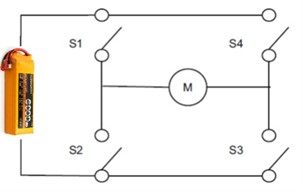

H-Bridge Circuit:

To understand the functionality of the Motor Shield Module, it’s essential to grasp the concept of an H-Bridge circuit. An H-Bridge circuit consists of four switches arranged in an “H” shape configuration, with the motor positioned at the center. This arrangement allows for the control of the motor’s spinning direction by changing the polarity of its input voltage.

Here’s how it works:

Closing two specific switches simultaneously in the H-Bridge circuit reverses the polarity of the voltage applied to the motor, causing a change in the spinning direction of the motor.

For example:

Closing switches S1 and S3 will turn the motor on in a particular direction.

Closing switches S4 and S2 will turn the motor on in the opposite direction.

The Motor Shield Module effectively manages the operation of the DC motors in our system, providing the necessary control for movement and direction. This component plays a crucial role in ensuring the mobility and navigation of the Acoubot within the room.
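From the controller's side, each H-bridge channel of an L293D-type driver is steered by an enable input and two direction inputs. The sketch below models that logic in software only. The labels "forward" and "reverse" are arbitrary (the actual direction depends on how the motor is wired), and the function name is hypothetical.

```python
def motor_state(enable, in1, in2):
    """Resulting motor behavior for one H-bridge channel.

    enable low      -> outputs disabled, motor coasts to a stop
    in1 != in2      -> voltage across the motor, it spins one way or the other
    in1 == in2      -> both terminals at the same potential, motor brakes
    """
    if not enable:
        return "coast"
    if in1 and not in2:
        return "forward"
    if in2 and not in1:
        return "reverse"
    return "brake"


if __name__ == "__main__":
    for en in (False, True):
        for a in (False, True):
            for b in (False, True):
                print(f"EN={int(en)} IN1={int(a)} IN2={int(b)} -> {motor_state(en, a, b)}")
```

Swapping the two input levels reverses the polarity across the motor, which is the software equivalent of closing the opposite pair of switches.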

Energy

The power supply strategy for the mobile unit (MU) in the Acoubot system involves the management of two distinct power-consuming groups:

- Group 1: This group includes critical components like the Raspberry Pi, LIDAR, Ultrasonic sensor, microphone, and Motorshield HAT (excluding V_s, which powers the L293DD chip).

- Group 2: This group is responsible for powering the four motors that drive the wheels.

The power consumption for each component in Group 1 was calculated based on information available in their respective datasheets. However, since the microphone lacked power consumption details, an estimation was made by referring to datasheets of similar microphones within the same category.

To predict the usage time of the MU’s power supply, Peukert’s law was utilized. Peukert’s law is an equation that relates the discharge time of a battery to its capacity, discharge current, and a constant known as the Peukert constant (k). In this case, a Peukert constant of 1 was assumed, indicating an ideal battery.

Here is the formula used for calculating usage time (t) with Peukert’s law:

\[\Large t = H \cdot \left(\frac{C}{I \cdot H}\right)^k\]

Where:

t - is the actual time to discharge the battery (in hours).

H - is the rated discharge time (in hours).

C - is the rated capacity at that discharge rate (in milliampere-hours).

I - is the actual discharge current (in milliamperes).

k - is the Peukert constant (dimensionless).

With a Peukert constant of 1, the calculated usage time for the power supply was approximately 4 hours.

The power supply employs a battery with a capacity of 6700 milliampere-hours (mAh). The maximum current tolerance for the batteries is 6.8A under a 3.7V nominal voltage, which corresponds to a maximum power of 25 watts. However, the actual maximum power after DC-DC conversion is approximately 20-22.6 watts.

It is worth noting that the division of the power supply into two groups is primarily due to the power requirements of the motors, which can exceed the capacity of the primary power source. To address this, an additional battery with a voltage of 11.1V and a capacity of 6000mAh is employed to power the motors.

In summary, the power supply strategy for the Acoubot MU takes into account power consumption calculations, Peukert’s law, and the use of separate batteries to ensure stable and reliable operation, even under demanding conditions.

| Device | Current [mA] |
|---|---|
| RPi | 1200 |
| Lidar scanner | 300 |
| Lidar motor | 100 |
| Mic | 50 |
| Ultrasonic | 15 |
| Motorshield | 50 |
| Total | 1715 |

The UPS capacity is 6700 mAh, so with k = 1:

\[t = \frac{C}{I} = \frac{6700}{1715} \approx 3.9\ \text{hours}\]

The key points of the power budget are summarized below:

- Battery Tolerance and Maximum Power: The batteries used in the system can tolerate a maximum current of 6.8A under a 3.7V nominal voltage, resulting in a maximum power capacity of 25 watts.

- Total Power Usage: The combined power consumption of all components in the system, as indicated in the table, is approximately 8 watts.

- DC-DC Conversion Efficiency: The UPS employs 3.7V batteries whose output is converted to 5V. The conversion is estimated to have an efficiency of 80% to 90%, which results in an actual maximum power output of 20-22.6 watts.

- Split Power Supply Groups: The decision to divide the power supply into two groups is primarily driven by the power demands of the Motorshield’s L293DD chip. The V_s supply to the motors starts at 600mA per motor, and at peak usage it can exceed 1.2A. Since all four motors are activated when the MU moves, this chip places a substantial power demand on the system.

- Minimal Power Usage: With all four motors drawing 600mA each (4 motors x 600mA x 5V = 12 watts), in addition to the 8 watts consumed by the rest of the system, the minimal power demand would be at least 20 watts. This is already at the lower edge of the UPS’s actual maximum output, so such a demand could destabilize the system even under minimal stress.

- Additional Battery for Motors: To ensure stable operation under maximum stress, an 11.1V, 6000mAh battery is introduced to power the MU’s motors independently. This battery configuration aligns with the project’s objective of enabling the MU to move and function seamlessly under demanding conditions.

- Usage Time Calculation: The usage time of the additional TCB battery, which powers the motors, is estimated to be approximately 1.25 hours under continuous maximum stress. However, it’s important to note that the motors are not continuously active, and the MU’s movements are intermittent, which would extend the overall usage time.

This power management approach effectively addresses the varying power requirements of different components in the system and ensures stable and reliable operation under a range of conditions, including maximum stress scenarios.
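
The arithmetic behind these estimates can be reproduced with a short script. This is a minimal sketch rather than the project's code: the function name `peukert_runtime_hours` and the 20-hour rated discharge time are assumptions made for illustration (with a Peukert constant of 1 the rated time cancels out and the result reduces to capacity divided by current).

```python
# Current draw per device in mA, taken from the table above.
LOADS_MA = {
    "RP": 1200,
    "lidar scanner": 300,
    "lidar motor": 100,
    "mic": 50,
    "ultrasonic": 15,
    "motorshield": 50,
}


def peukert_runtime_hours(capacity_mah, load_ma, k=1.0, rated_hours=20.0):
    """Peukert's law: t = H * (C / (I * H)) ** k.

    rated_hours (H) is a hypothetical rating; for k = 1 it has no effect.
    """
    capacity_ah = capacity_mah / 1000.0
    load_a = load_ma / 1000.0
    return rated_hours * (capacity_ah / (load_a * rated_hours)) ** k


total_ma = sum(LOADS_MA.values())

# UPS group: 6700 mAh feeding everything except the wheel motors.
ups_hours = peukert_runtime_hours(6700, total_ma)

# Motor group: 6000 mAh feeding four motors at the 1.2 A peak current.
motor_hours = peukert_runtime_hours(6000, 4 * 1200)

# Maximum battery power and what is left after 80-90 % DC-DC efficiency.
max_power_w = 6.8 * 3.7
usable_power_w = (0.8 * max_power_w, 0.9 * max_power_w)

print(f"total load: {total_ma} mA")
print(f"UPS runtime: {ups_hours:.2f} h, motor battery runtime: {motor_hours:.2f} h")
print(f"usable UPS power: {usable_power_w[0]:.1f}-{usable_power_w[1]:.1f} W")
```

Raising `k` above 1 shows how much the estimated runtime would shrink for a less ideal battery.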

Uninterruptible Power Supply (UPS) module

The UPS (Uninterruptible Power Supply) module in the Acoubot system plays a crucial role in providing stable power to all components of the mobile unit, except for the motors of the wheels. Here are some key details about the UPS module:

- Power Supply Stability: The UPS module ensures a consistent and uninterrupted power supply to all components of the mobile unit. This stable power supply is essential for the proper functioning of sensitive electronics and sensors.

- Battery Capacity and Duration of Operation: The UPS module is equipped with two lithium batteries with a combined capacity of 6700 milliampere-hours (mAh). Under normal operating conditions, this capacity allows the mobile unit to operate continuously for approximately four hours before requiring a recharge.

- Recharge Port: The module board of the UPS includes a USB Type-C port for recharging the batteries when they are depleted.

Overall, the UPS module enhances the reliability and performance of the mobile unit by ensuring a stable power supply to its components, allowing it to function optimally for extended periods before requiring a recharge.

Motor Power supply - Vs

The motor driver for the Acoubot system utilizes a separate power supply specifically for the L293DD chip. Here’s how the power supply is distributed:

- UPS Powers H-Bridge Switches: The UPS module provides power to the switches (S1-S4) of the H-bridge circuit. These switches are crucial for controlling the direction and operation of the DC motors in the mobile unit.

- Additional Power Supply for DC Motors: In addition to the power from the UPS, an additional power supply is dedicated to powering the DC motors. This secondary power supply ensures that the DC motors receive the necessary voltage and current when a pair of switches (S1-S4) in the H-bridge circuit is closed.

The division of power supply sources in this manner allows for precise control over the DC motors’ operation. The UPS module ensures that the control switches operate smoothly, while the dedicated power supply for the motors guarantees that they receive the required power for movement and operation.

This configuration enhances the overall stability and reliability of the mobile unit’s motor control system, allowing for precise and efficient maneuverability during operation.

| Specification | Value |
|---|---|
| Brand | TCBWORTH |
| Capacity | 6000 mAh |
| Continuous Discharge Rate | 30C |
| Burst Rate | 60C |
| Voltage Per Cell | 3.7 V |
| Max Voltage Per Cell | 4.2 V |
| Voltage Per Pack | 11.1 V |
| Max Voltage Per Pack | 12.6 V |
| Suggested Charge Rate | 1-5C |
| Plug | XT60 |

Portable mini-Router

To ensure stable communication between the mobile unit (MU) and stationary unit (SU) in the Acoubot system, a wireless LAN (WLAN) setup was implemented. Here are the key details regarding the communication setup:

- Direct WLAN Mode: Initially, attempts were made to establish a direct WLAN connection between the MU and SU, allowing them to communicate without an access point. However, this approach proved complex, and a reliable connection was difficult to establish.

- Portable Mini Router (GL-MT300N-V2): To simplify and stabilize the communication, a portable mini router, the GL-MT300N-V2, was introduced into the system. This router supports the IEEE 802.11n wireless protocol, operating at up to 300 Mbps in the 2.4 GHz frequency band.

- Infrastructure Mode: The mini router is configured to operate in infrastructure mode. In this mode, it serves as the central WLAN router within the network. All wireless devices, including the MU and SU, communicate through this central router, providing a more structured and reliable network framework.

- Compact Design and Power Supply: The mini router’s compact packaging (58 x 58 x 25 mm) makes it easy to integrate into the system. It is powered by a 5 V, 1 A supply through a micro-USB port, which can be connected to a laptop or another suitable power source.

- Web Configuration: The mini router comes with a web-based configuration interface that can be accessed through any web browser. Its default IP address is 192.168.1.1, and it supports the Dynamic Host Configuration Protocol (DHCP), allowing devices connected to the router to receive IP addresses automatically.

- Static IP Address for MU: To function as a server, the MU is assigned a static IP address, configured in the router’s web interface. The MU’s static IP address is set to 192.168.1.200, ensuring consistent and predictable communication within the network.

By implementing this wireless communication setup with the portable mini router, the Acoubot system achieves stable and reliable connectivity between the MU and SU, facilitating data transfer and control between the two units effectively.

6. Software

Background

In our four years of study, we’ve acquired proficiency in three programming languages: C, C++, and Python. When it came to developing the software for this project, our primary goal was to create a program that could be easily coded, upgraded, and maintained. We needed a programming language that could seamlessly interface with the various components of the Mobile Unit (MU) and could be used across different operating systems. Python emerged as the ideal choice for meeting these requirements, as it is fully compatible with both Linux on the Raspberry Pi and Windows.

Python’s extensive support for modules and sensors, along with the availability of libraries and code examples, made it a user-friendly choice. These resources simplified the process of self-learning for each module and sensor, facilitating our understanding and utilization of these components. By coding in Python, we were able to focus more on developing the core algorithms of the project and less on creating I/O functions from scratch. While there was still a substantial amount of work involved in processing raw sensor data into usable, analyzable data, Python’s flexibility and robust libraries helped streamline the development process.

Figure 6-1: Software architecture diagram

Software improvement

While the project is currently in the prototype phase, there are several opportunities for software improvement that can lead to more robust solutions and reduced testing time. In terms of acoustic solutions, gathering a larger dataset from various locations could enable the exploration of machine learning algorithms to achieve more accurate results.

Additionally, the navigation process could be enhanced by incorporating additional sensors, such as an accelerometer, to reduce the number of scans required and potentially complete the entire process in a single scan. This would also aid in correcting cumulative errors in the SLAM (Simultaneous Localization and Mapping) algorithm, further improving the system’s overall performance.

Sensor reading

Microphone

When reading data from the microphone, the system utilizes the “Recording.py” module, which relies on two libraries:

“PyAudio”: This library is used to obtain a continuous stream of audio data from the microphone. It allows the system to collect audio data from the USB microphone chunk by chunk in a simple loop.

“wave”: This library, part of the Python standard library, is employed to store the collected data in Wave format on the Raspberry Pi’s memory card.

The configuration for reading data from the UMIK-01 microphone is based on the microphone’s datasheet. The mobile unit employs the measure function, which records the acoustic measurements and saves them in Wave file format.

# Wave configuration

audio = pyaudio.paInt24 # 24-bit resolution

chans = 1 # 1 channel

chunck = 8192 # 2^13 samples for buffer

sampl_rate = 48000 # 48 kHz sampling rate

dev_index = 1 # Device index

def measure(recort_time, wav_name):  # the `measure` function (signature only, body not shown)
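
The storage half of such a function can be sketched with the standard-library `wave` module alone. The helper names `frames_needed` and `save_wav` are hypothetical and not taken from “Recording.py”. The audio chunks, which on the robot would come from the PyAudio stream, are replaced here by silent buffers so that the example runs without a microphone.

```python
import os
import tempfile
import wave

CHANNELS = 1          # mono, as in the configuration above
SAMPLE_WIDTH = 3      # bytes per sample for 24-bit audio
SAMPLE_RATE = 48000   # 48 kHz
CHUNK = 8192          # samples per buffer


def frames_needed(record_time, rate=SAMPLE_RATE, chunk=CHUNK):
    """Number of whole buffers to read for record_time seconds."""
    return int(rate / chunk * record_time)


def save_wav(frames, wav_name):
    """Write a list of raw PCM byte chunks to a WAV file."""
    with wave.open(wav_name, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH)
        wav_file.setframerate(SAMPLE_RATE)
        wav_file.writeframes(b"".join(frames))


# Stand-in for the microphone stream: one second of silent chunks.
silent_chunk = bytes(CHUNK * SAMPLE_WIDTH * CHANNELS)
frames = [silent_chunk for _ in range(frames_needed(1))]

demo_path = os.path.join(tempfile.gettempdir(), "acoubot_demo.wav")
save_wav(frames, demo_path)

# Read the header back to confirm the format that was written.
with wave.open(demo_path, "rb") as check:
    demo_params = (check.getnchannels(), check.getsampwidth(),
                   check.getframerate(), check.getnframes())
print(demo_params)
```

Because only whole buffers are read, one second at 48 kHz with 8192-sample buffers yields five buffers, slightly less than a full second of audio.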

Lidar

The Acoubot system reads information from the Lidar sensor by utilizing the RPlidar library. This library is developed by the company that produces the Lidar sensor and is well-suited for interfacing with the specific Lidar model used in the project.

The Lidar sensor connects to the system via USB, and scans are performed using the Lidar.iter_scans() function.

To further process and work with the raw Lidar data, the system also employs the breezyslam libraries. Specifically, it uses the RPLidar sensor model from breezyslam.sensors and the RMHC_SLAM algorithm from breezyslam.algorithms. These libraries facilitate real-time data processing and SLAM (Simultaneous Localization and Mapping).

from breezyslam.sensors import RPLidar as LaserModel

from breezyslam.algorithms import RMHC_SLAM

Additionally, the Lidar sensor receives commands for specific actions, such as stopping the DC motor during recordings to minimize noise interference. Proper disconnection commands are also crucial to ensure that the sensor can be used for a second scan without encountering exceptions or issues.

Ultrasonic

The Acoubot system uses an ultrasonic sensor to measure the height of the ceiling. To maximize accuracy, the system employs a function that takes multiple height measurements of the ceiling. These measurements are averaged to obtain a more precise estimate of the ceiling height.

During the navigation process in the room, height measurements are taken at different locations. The system then averages these measurements to further enhance accuracy. The only input parameter required for this function is the height of the sensor from the floor. This parameter allows for sensor calibration in case there are any hardware changes or adjustments.

As a result, the output of this function provides the height of the ceiling in meters, which is crucial information for the Acoubot system’s acoustic measurements and calculations.

def measure_height(height_offset):

"""

function for measuring height of the room using Ultrasonic sensor

:param height_offset: offset from the floor

:return: averaged distance in meter

"""

$N$ - number of measurements

$V_{sound}[\frac{m}{s}]$ - speed of sound in air

$time_{echo} [Sec]$ - echo time

$time_{trig} [Sec]$ - trigger time

$h_{offset} [m]$ - offset from the floor

$H_{avg} [m]$ - average height of ceiling
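
The symbols above suggest that each measurement converts the echo round-trip time into a distance, and that the distances are averaged and added to the sensor offset. The sketch below follows that reading; it is an assumption, since the formula itself is not reproduced in the text. The function names, the timing values and the 343 m/s speed of sound are illustrative, and on the robot the two timestamps would come from the sensor's trigger and echo pins.

```python
V_SOUND = 343.0  # m/s, speed of sound in air at about 20 degrees Celsius


def echo_distance(time_trig, time_echo, v_sound=V_SOUND):
    """Distance to the ceiling from one echo: the sound travels there and back."""
    return v_sound * (time_echo - time_trig) / 2.0


def average_height(echo_times, height_offset, v_sound=V_SOUND):
    """Average N echo measurements and add the sensor's height above the floor."""
    distances = [echo_distance(t0, t1, v_sound) for t0, t1 in echo_times]
    return height_offset + sum(distances) / len(distances)


# Hypothetical (time_trig, time_echo) pairs in seconds for N = 3 measurements.
samples = [(0.0, 0.0140), (0.0, 0.0142), (0.0, 0.0138)]
ceiling_height = average_height(samples, height_offset=0.2)
print(f"ceiling height: {ceiling_height:.3f} m")
```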

Signal processing

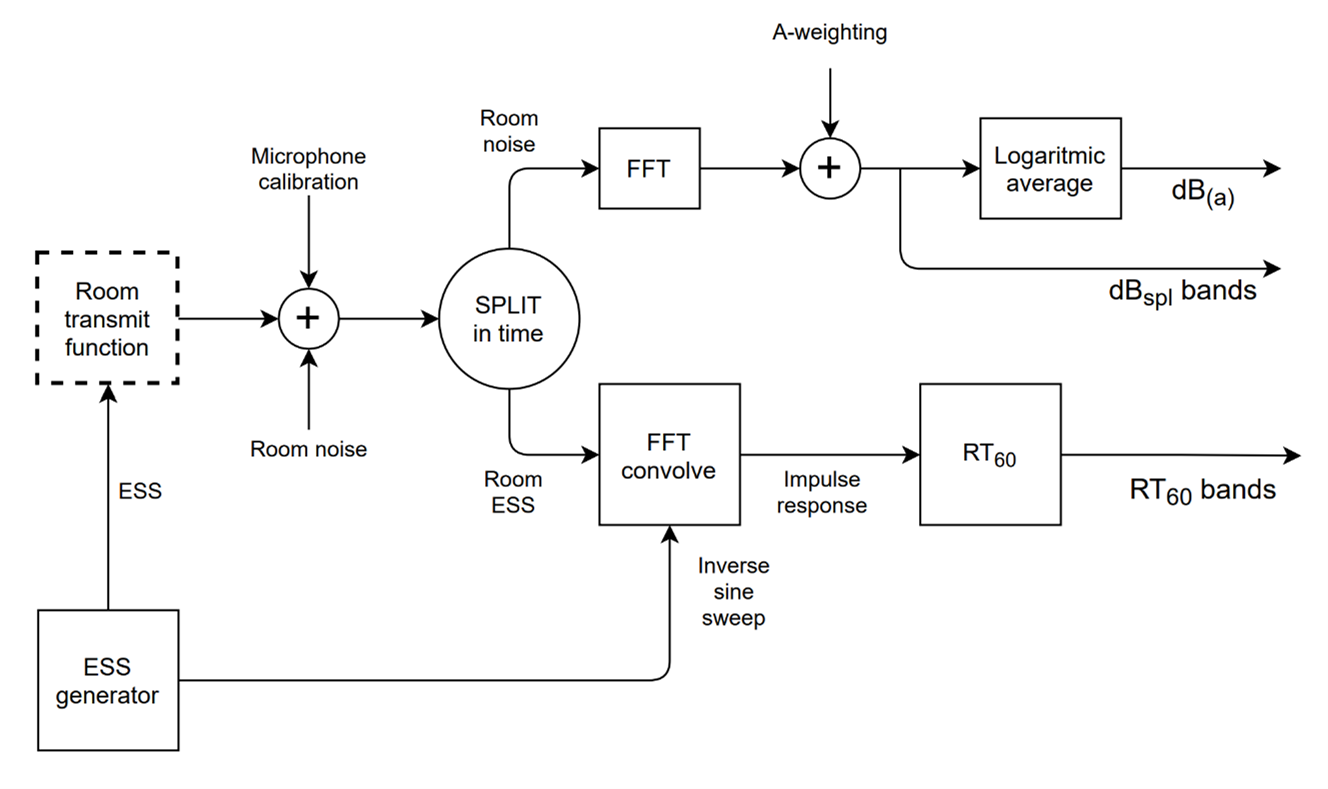

Audio analyzer module

The Audio Analyzer module in the Acoubot system determines the transfer function of a room. It does so by broadcasting an Exponential Sweep Sine (ESS) signal into the room and recording it with a dedicated microphone. The signal is recorded with 24-bit quantization, which provides detailed audio data for analysis and processing. The recorded data is the basis for characterizing the acoustic properties of the room and for deciding which adjustments would improve them.

Figure 6-3: Audio processing

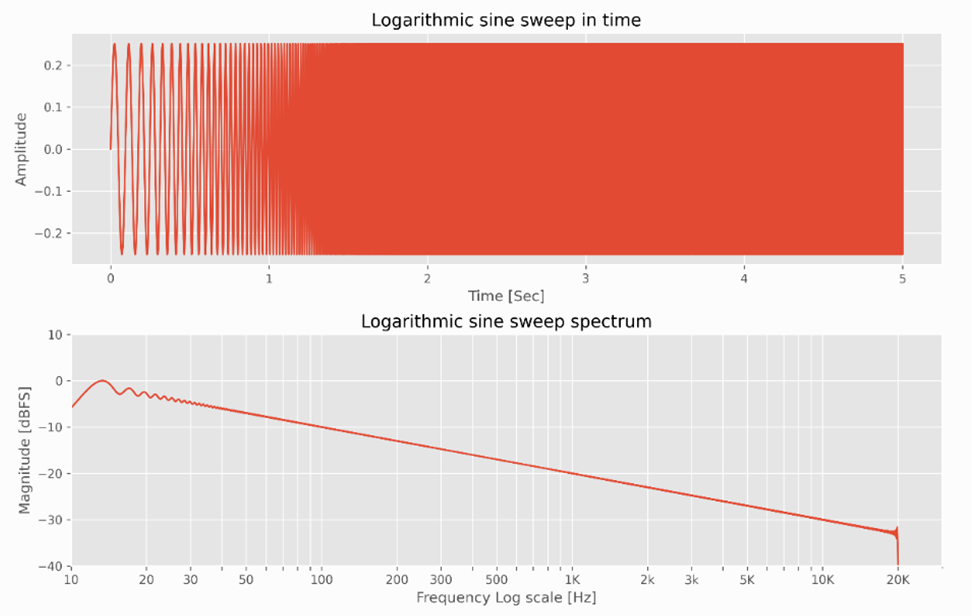

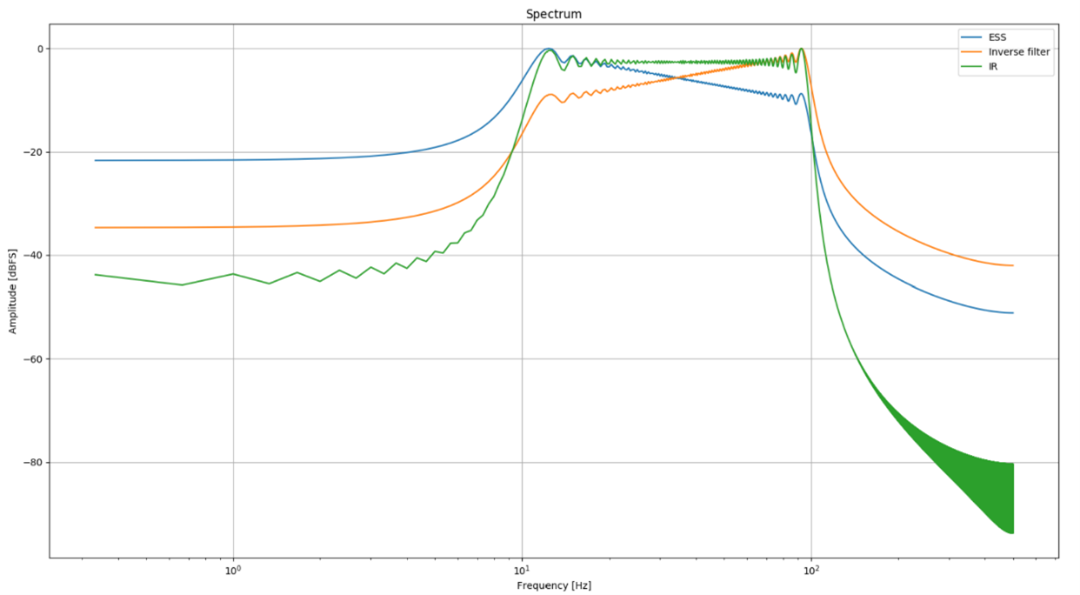

ESS signal

The Exponential Sweep Sine (ESS) signal used in acoustic testing offers several advantages, including test speed and the avoidance of distortion in the measurement range. Unlike older methods that involved recording loud sounds like gunfire or balloon explosions to capture room characteristics, the ESS signal is designed to provide cleaner and more controlled data without distortion.

The ESS signal can be mathematically defined by the following formula:

\[\Large x(t) = \sin\left(\frac{2 \pi f_{s} T}{R}\left(e^{\frac{tR}{T}}-1\right)\right)\]

$f_s, f_e$ - Initial and final frequency of the sweep

$T$ - Duration of a sweep

$R=\ln(\frac{f_e}{f_s})$ - sweep rate

Figure 6-2: ESS

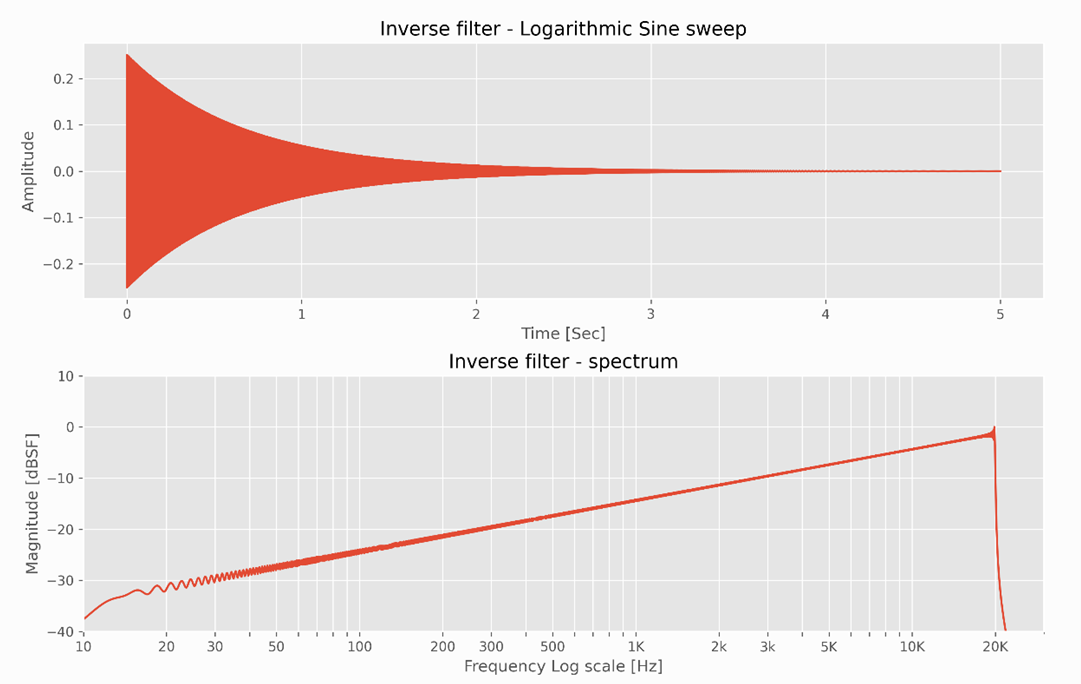
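
The ESS formula can be evaluated sample by sample. The following is a minimal sketch using only the standard library. The function names and the sweep parameters (100 Hz to 2 kHz, 0.5 s, 8 kHz sampling) are illustrative assumptions, not the values used in the project.

```python
import math


def ess(f_start, f_end, duration, sample_rate):
    """Exponential sweep x(t) = sin(2*pi*f_s*T/R * (exp(t*R/T) - 1))."""
    rate = math.log(f_end / f_start)                  # R = ln(f_e / f_s)
    scale = 2.0 * math.pi * f_start * duration / rate
    n_samples = int(duration * sample_rate)
    return [
        math.sin(scale * (math.exp((n / sample_rate) * rate / duration) - 1.0))
        for n in range(n_samples)
    ]


def instantaneous_frequency(t, f_start, f_end, duration):
    """Derivative of the sweep phase divided by 2*pi: f(t) = f_s * exp(t*R/T)."""
    return f_start * math.exp(t * math.log(f_end / f_start) / duration)


sweep = ess(100.0, 2000.0, 0.5, 8000)
print(len(sweep), "samples")
print("frequency at the end of the sweep:",
      instantaneous_frequency(0.5, 100.0, 2000.0, 0.5), "Hz")
```

The instantaneous frequency grows exponentially from $f_s$ at $t = 0$ to $f_e$ at $t = T$, so the sweep spends more time at low frequencies than at high ones.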

The inverse filter

The inverse filter is obtained by time-reversing the Exponential Sweep Sine (ESS) signal and scaling its amplitude with an exponentially decaying envelope. The scaling compensates for the fact that the ESS spends more time, and therefore puts more energy, at low frequencies than at high frequencies.

Convolving the ESS with its inverse filter yields an approximation of a unit impulse. Convolving the signal recorded in the room with the same inverse filter therefore yields the room’s impulse response, from which properties such as the reverberation time and the room modes can be assessed.

\[\Large f(t) = \frac{x_{inv}(t)}{e^{\frac{tR}{T}}}\]

where $x_{inv}(t) = x(T - t)$ is the time-reversed sweep.

Figure 6-4: Inverse filter

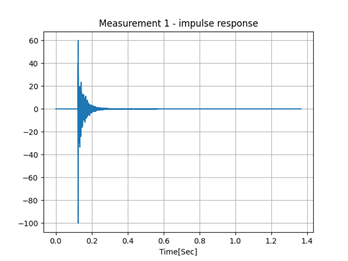
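
In discrete time, the inverse filter amounts to reversing the sample list and dividing each sample by the growing exponential. The sketch below illustrates this under assumed sweep parameters; `inverse_filter` is a hypothetical helper name, and the short sweep is generated inline only to keep the example self-contained.

```python
import math


def inverse_filter(sweep, f_start, f_end, sample_rate):
    """f(t) = x_inv(t) / exp(t*R/T), with x_inv the time-reversed sweep."""
    duration = len(sweep) / sample_rate        # T
    rate = math.log(f_end / f_start)           # R = ln(f_e / f_s)
    reversed_sweep = sweep[::-1]               # x_inv(t) = x(T - t)
    return [
        sample / math.exp((n / sample_rate) * rate / duration)
        for n, sample in enumerate(reversed_sweep)
    ]


# Illustrative sweep: 100 Hz to 2 kHz, 0.5 s, sampled at 8 kHz.
F_START, F_END, SAMPLE_RATE, DURATION = 100.0, 2000.0, 8000, 0.5
R = math.log(F_END / F_START)
sweep = [
    math.sin(2.0 * math.pi * F_START * DURATION / R
             * (math.exp((n / SAMPLE_RATE) * R / DURATION) - 1.0))
    for n in range(int(SAMPLE_RATE * DURATION))
]

inverse = inverse_filter(sweep, F_START, F_END, SAMPLE_RATE)
print("envelope at the end of the filter:", math.exp(-R))  # equals f_s / f_e
```

The filter starts with the high-frequency end of the sweep at full amplitude and ends with the low-frequency start attenuated by roughly $f_s / f_e$.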

Impulse response

Calculating the room’s acoustic impulse response involves a convolution process between the recorded signal and the inverse filter. This convolution is typically performed in the frequency domain rather than the time domain to optimize computational resources.

In the frequency domain, the recorded signal and the inverse filter are transformed using techniques such as the Fast Fourier Transform (FFT) to convert them from the time domain to the frequency domain. Once in the frequency domain, the convolution operation becomes a simple element-wise multiplication of the transformed signals.

The result of this convolution in the frequency domain is then transformed back to the time domain using an inverse FFT to obtain the room’s impulse response. The impulse response represents how sound behaves in the room over time, including reflections, echoes, and decay characteristics.

Analyzing the room’s impulse response is valuable for various acoustic applications, including assessing reverberation time, speech intelligibility, and overall room acoustics. It provides insights into the room’s behavior with respect to sound, helping to make informed decisions for acoustic treatments or modifications.
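
The equivalence between convolution and element-wise multiplication of spectra can be checked on a toy example. The sketch below uses a small recursive radix-2 FFT written in pure Python so that it has no dependencies. This is an illustration only: a real implementation would normally rely on an optimized FFT library, and the two short input lists merely stand in for the recorded signal and the inverse filter.

```python
import cmath


def fft(values, inverse=False):
    """Recursive radix-2 FFT; len(values) must be a power of two."""
    n = len(values)
    if n == 1:
        return list(values)
    even = fft(values[0::2], inverse)
    odd = fft(values[1::2], inverse)
    sign = 1.0 if inverse else -1.0
    result = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        result[k] = even[k] + twiddle
        result[k + n // 2] = even[k] - twiddle
    return result


def fft_convolve(signal, kernel):
    """Linear convolution via zero-padding, FFT, multiplication and inverse FFT."""
    out_len = len(signal) + len(kernel) - 1
    size = 1
    while size < out_len:          # pad to a power of two to avoid wrap-around
        size *= 2
    spec_a = fft([complex(v) for v in signal] + [0j] * (size - len(signal)))
    spec_b = fft([complex(v) for v in kernel] + [0j] * (size - len(kernel)))
    product = [a * b for a, b in zip(spec_a, spec_b)]
    time_domain = fft(product, inverse=True)
    return [v.real / size for v in time_domain[:out_len]]


recorded = [1.0, 2.0, 3.0]       # stand-in for the recorded signal
inv_filter = [0.0, 1.0, 0.5]     # stand-in for the inverse filter
result = fft_convolve(recorded, inv_filter)
print([round(v, 6) for v in result])
```

Zero-padding both inputs to at least the combined length is what turns the circular convolution implied by the FFT into the linear convolution needed here.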

Figure 6-5: Transfer function

Amplitude to dBFS

Converting amplitude to dBFS (Decibels relative to Full Scale) is a common practice in audio processing and measurement. dBFS is a logarithmic unit used to express the level of a signal relative to the maximum possible digital amplitude in a system.

The formula to convert amplitude to dBFS is:

\[\Large dBFS = 20 \log_{10}\left(\frac{\vert A \vert}{A_{FS}}\right)\]

Where:

- $dBFS$ - the level in decibels relative to full scale.

- $A$ - the amplitude of the signal to convert.

- $A_{FS} = 2^{B-1}-1$ - the full-scale amplitude, i.e. the maximum possible amplitude in the system.

- $B$ - the bit depth (24 bits in our case, so $A_{FS} = 2^{23}-1 = 8388607$).

When samples are normalized to floating point, the full-scale amplitude is 1.0 instead. In both cases, a signal at full scale has a level of 0 dBFS, the highest level that can be represented without clipping, and every lower amplitude gives a negative dBFS value.

dBFS to amplitude

To convert dBFS (Decibels relative to Full Scale) back to amplitude, you can use the following formula:

\[\Large A = 10^{\frac{dBFS}{20}}\cdot A_{FS}\]

Using this formula, the amplitude of a signal can be calculated from its dBFS value and the full-scale amplitude of the system.

For example, for a level of -6 dBFS in a system where the full-scale amplitude is 1.0, the corresponding amplitude is:

$\large A = 10^{\frac{-6}{20}} \cdot 1 \approx 0.501$

So, in this case, the amplitude is approximately 0.5, which means the signal’s level is about half of the maximum possible amplitude in the system.
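
Both conversions are one-liners in Python. The sketch below uses hypothetical function names and the 24-bit full-scale value as its default. Returning negative infinity for a zero amplitude is an illustrative choice made here to avoid taking the logarithm of zero.

```python
import math

FULL_SCALE_24BIT = 2 ** (24 - 1) - 1   # A_FS for B = 24 bits


def amplitude_to_dbfs(amplitude, full_scale=FULL_SCALE_24BIT):
    """dBFS = 20 * log10(|A| / A_FS)."""
    if amplitude == 0:
        return float("-inf")           # digital silence has no finite level
    return 20.0 * math.log10(abs(amplitude) / full_scale)


def dbfs_to_amplitude(dbfs, full_scale=FULL_SCALE_24BIT):
    """A = 10 ** (dBFS / 20) * A_FS."""
    return 10.0 ** (dbfs / 20.0) * full_scale


print(amplitude_to_dbfs(FULL_SCALE_24BIT))            # full scale is 0 dBFS
print(round(dbfs_to_amplitude(-6.0, full_scale=1.0), 3))
print(round(amplitude_to_dbfs(FULL_SCALE_24BIT / 2), 2))
```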

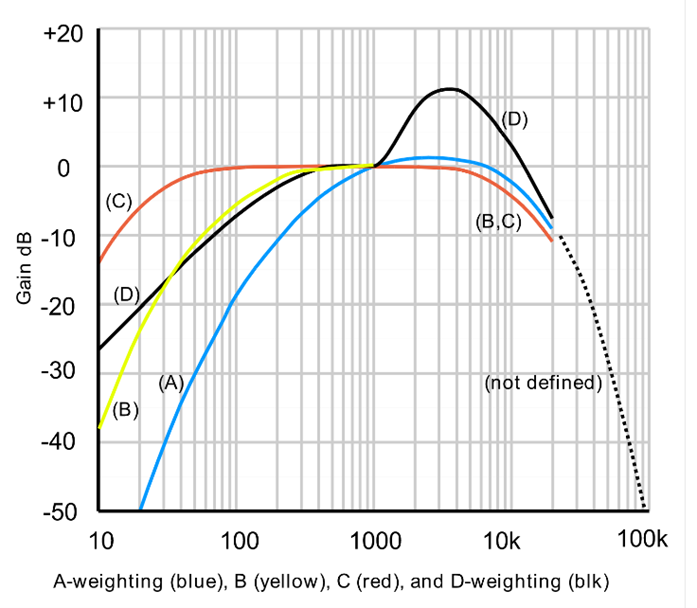

A-weighting

A-weighting is a type of frequency weighting used in acoustic measurements to account for the sensitivity and response of the human ear. The human ear doesn’t hear all frequencies equally; it is more sensitive to certain frequencies than others. A-weighting is designed to approximate the way the human ear responds to different frequencies, giving more weight to frequencies that are more audible to the human ear.

In practice, when performing acoustic measurements, such as measuring noise levels or analyzing audio signals, you often need to apply A-weighting to the measurements to make them more representative of how the human ear perceives sound. This is particularly important when assessing noise exposure or evaluating the impact of noise on human health and comfort.

To apply A-weighting to acoustic measurements, each frequency component of the measurement is scaled by the gain of the A-weighting curve at that frequency. The gains are typically provided in standardized tables. This attenuates the low and very high frequencies, where the ear is less sensitive, and slightly emphasizes the range of roughly 1 kHz to 6 kHz.

The result of applying A-weighting is a weighted measurement that better reflects the way humans perceive sound. It is commonly expressed in units like dB(A), which indicates that A-weighting has been applied to the measurement.

In our algorithm, the levels are calculated in one-third octave bands, and the tabulated A-weighting value of each band, in dB, is added to the level measured in that band.

Equivalently, in the linear frequency domain, let X(f) be the spectrum of the signal (i.e., the Fourier transform of the signal), and A(f) be the gain of the A-weighting curve. The A-weighted spectrum Y(f) is calculated as:

\[\Large Y(f) = X(f) \cdot A(f)\]

where $\cdot$ denotes multiplication at each frequency. Multiplication in the frequency domain corresponds to convolution with the filter’s impulse response in the time domain. Because levels in dB are logarithmic, the same operation becomes the addition of the table values described above.

The A-weighting curve, A(f), is defined in standards such as ANSI S1.4 and is available both as an analytic function of frequency and as tabulated values for each one-third octave band. The standardized values should be used so that the A-weighting is applied accurately to the measurements.
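
As an illustration, the analytic form of the A-weighting curve published in IEC 61672-1 can be evaluated directly and added to band levels in dB. The function names and the band levels below are hypothetical; the project itself adds tabulated values, which this sketch approximates by evaluating the curve at each band's center frequency.

```python
import math


def a_weighting_db(freq_hz):
    """A-weighting gain in dB at freq_hz, analytic form from IEC 61672-1."""
    f2 = freq_hz ** 2
    numerator = 12194.0 ** 2 * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.0 dB offset normalizes the curve to 0 dB at 1 kHz.
    return 20.0 * math.log10(numerator / denominator) + 2.0


def apply_a_weighting(band_levels_db):
    """Add the A-weighting gain to each band level (both in dB)."""
    return {
        freq: level + a_weighting_db(freq)
        for freq, level in band_levels_db.items()
    }


# Hypothetical unweighted one-third octave band levels in dB.
measured = {100.0: 60.0, 1000.0: 60.0, 4000.0: 60.0}
for freq, level in apply_a_weighting(measured).items():
    print(f"{freq:7.0f} Hz: {level:5.1f} dB(A)")
```

With equal unweighted levels, the 100 Hz band ends up about 19 dB below the 1 kHz band, which reflects the ear's reduced sensitivity at low frequencies.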

Logarithmic Average

The formula for calculating a logarithmic average is as follows:

\[\Large L_{average} = 10^{\frac{1}{N} \displaystyle\sum\limits_{i=1}^{N} \log_{10}(x_i)}\]

Where:

$L_{average}$ - the resulting logarithmic average.

$N$ - the total number of values in the dataset.

$x_i$ - an individual value in the dataset (must be positive).

This is the geometric mean of the values. It is useful for computing an average that gives equal weight to differences across orders of magnitude, so that a few very large values do not dominate the result. It is commonly used in various fields, including environmental science, finance, and audio engineering.
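
A direct translation of the formula, with a hypothetical function name, shows how the result differs from the arithmetic mean:

```python
import math


def logarithmic_average(values):
    """10 ** (mean of log10(x_i)), i.e. the geometric mean of the values."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("all values must be positive")
    mean_log = sum(math.log10(v) for v in values) / len(values)
    return 10.0 ** mean_log


data = [1.0, 10.0, 100.0]
print("logarithmic average:", logarithmic_average(data))
print("arithmetic mean:    ", sum(data) / len(data))
```

For values spread evenly over two orders of magnitude, the logarithmic average lands on the middle value, whereas the arithmetic mean is pulled toward the largest one.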

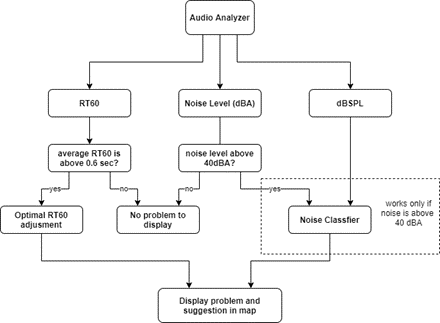

From Acoustic Measurements to Treatment Solutions

In this section, we delve into the process of translating acoustic measurement data into practical treatment solutions for optimizing room acoustics. The objective is to enhance the acoustic environment by addressing noise levels (dBA and dBSPL) and reverberation time (RT60).

Measurement Outputs:

Our algorithm generates three primary output measurements: RT60, dBA noise levels, and dBSPL noise levels. These measurements serve as the foundation for evaluating and improving room acoustics.

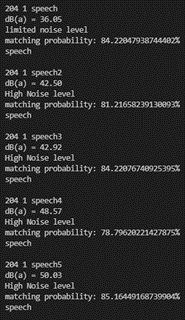

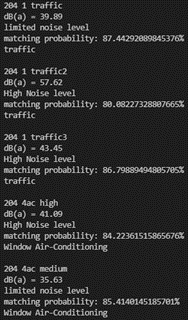

Loudness Test (dBA and dBSPL):

We commence the analysis with a loudness test, focusing on the dBA noise level, which provides a comprehensive assessment of overall noise within the room. If the recorded dBA noise level falls below the 40 dB threshold, the room is considered to have a low noise level and may not require additional acoustic treatment.

However, if the dBA noise level surpasses 40 dB, our algorithm proceeds to classify the noise into one of three common categories: traffic, speech, or air conditioning (HVAC). To perform this classification, we employ a comparison methodology that matches the acquired noise band pattern against a database of predefined patterns stored on the stationary unit (SU).

Noise Classification:

The classification process relies on histogram comparisons facilitated by the OpenCV library. This allows us to correlate the two noise band patterns, ultimately yielding a matching score that ranges from 0 to 1. A higher score indicates a stronger match between the measured data and the patterns in our database.

Upon conducting these comparisons with all available patterns, the algorithm identifies the dominant noise source. For each recognized noise pattern, our algorithm provides a set of associated problems and corresponding solutions. These results are presented to the user through an intuitive graphical user interface (GUI).
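
The comparison step can be sketched without OpenCV by computing the Pearson correlation between band patterns, which is the quantity that the correlation method of OpenCV's `compareHist` evaluates. Which comparison method the project uses is not stated, so this choice is an assumption. The reference patterns, the measured values and the function names are invented for the example.

```python
import math


def correlation(pattern_a, pattern_b):
    """Pearson correlation between two equally long band patterns."""
    mean_a = sum(pattern_a) / len(pattern_a)
    mean_b = sum(pattern_b) / len(pattern_b)
    covariance = sum((a - mean_a) * (b - mean_b)
                     for a, b in zip(pattern_a, pattern_b))
    var_a = sum((a - mean_a) ** 2 for a in pattern_a)
    var_b = sum((b - mean_b) ** 2 for b in pattern_b)
    return covariance / math.sqrt(var_a * var_b)


def classify_noise(measured, patterns):
    """Return the best-matching pattern name and all matching scores."""
    scores = {name: correlation(measured, ref) for name, ref in patterns.items()}
    return max(scores, key=scores.get), scores


# Hypothetical reference band levels in dB, from low to high frequency.
PATTERNS = {
    "traffic": [70, 68, 62, 55, 48, 40],
    "speech": [45, 55, 62, 60, 50, 40],
    "hvac": [60, 58, 50, 42, 36, 30],
}

measured_bands = [47, 56, 63, 59, 51, 42]
best, scores = classify_noise(measured_bands, PATTERNS)
print("dominant noise source:", best)
print({name: round(score, 3) for name, score in scores.items()})
```

Note that a Pearson correlation can be negative for strongly mismatched patterns, so scores outside the 0 to 1 range would need to be clipped or treated as no match.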

Reverberation Time (RT60) Analysis:

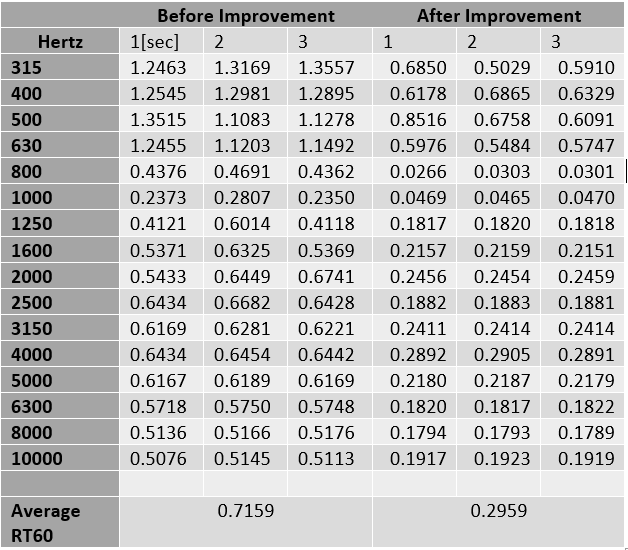

In the next stage of our algorithm, we assess the mean reverberation time (RT60) within the room. Initially, we set a threshold of 0.6 seconds as an indicator of high RT60.

Our RT60 calculations leverage the Sabine formula, incorporating sound absorption coefficients (α) sourced from our extensive database. Additional parameters include room dimensions obtained through measurements and the total area of acoustic treatment material in square meters (m²).

Similar to the noise classification process, we employ a correlation approach for RT60, aiming for lower matching scores to denote better results. The algorithm continues to recommend appropriate quantities of acoustic treatment material until the average RT60 falls below the 0.6-second threshold. These recommendations are presented to the user within the solution screen of the GUI.
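
The Sabine formula, $RT60 = 0.161 \cdot V / A$ with $V$ the room volume in m³ and $A = \sum S_i \alpha_i$ the total absorption, makes the recommendation loop easy to sketch. The room dimensions, absorption coefficients and function names below are invented for illustration and do not come from the project's database. The sketch assumes that the treatment panels cover existing wall surface, so each square meter adds the difference between the two coefficients.

```python
SABINE_CONSTANT = 0.161  # s/m, for metric units


def total_absorption(surfaces):
    """Sum of area * absorption coefficient over all (area, alpha) pairs."""
    return sum(area * alpha for area, alpha in surfaces)


def sabine_rt60(volume_m3, absorption_m2):
    """Sabine reverberation time in seconds."""
    return SABINE_CONSTANT * volume_m3 / absorption_m2


def treatment_area(volume_m3, surfaces, panel_alpha, covered_alpha,
                   target_rt60=0.6, step_m2=1.0, max_area_m2=200.0):
    """Smallest panel area (in step_m2 increments) that brings RT60 below target."""
    base = total_absorption(surfaces)
    area = 0.0
    while area <= max_area_m2:
        absorption = base + area * (panel_alpha - covered_alpha)
        if sabine_rt60(volume_m3, absorption) < target_rt60:
            return area
        area += step_m2
    return None  # target not reachable within max_area_m2


# Hypothetical 8 m x 6 m x 3 m classroom: (area in m^2, alpha) per surface.
room_volume = 8 * 6 * 3
room_surfaces = [(48, 0.05), (48, 0.10), (84, 0.03)]  # floor, ceiling, walls

rt60_before = sabine_rt60(room_volume, total_absorption(room_surfaces))
panels = treatment_area(room_volume, room_surfaces,
                        panel_alpha=0.8, covered_alpha=0.03)
print(f"RT60 before treatment: {rt60_before:.2f} s")
print(f"recommended panel area: {panels} m^2")
```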

In summary, the algorithm turns the acoustic measurements into actionable recommendations. It addresses both noise levels and reverberation time, guiding users toward an improved acoustic environment in their space.

Navigation: Bridging Measurements and Movement

Background

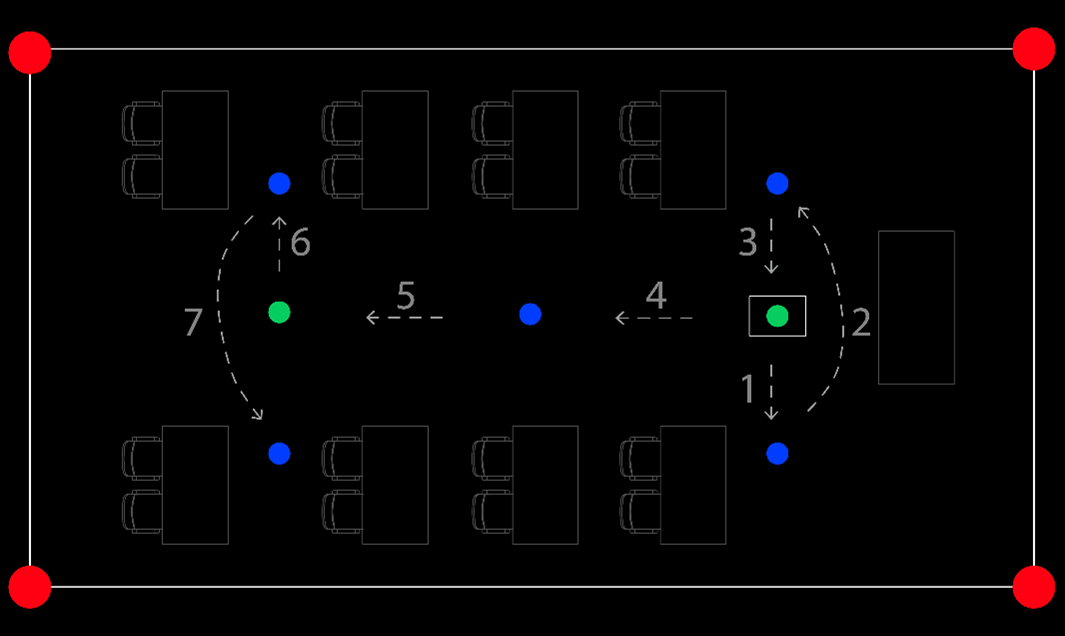

The navigation process is a crucial component that seamlessly integrates acoustic measurements obtained from the microphone and height measurements of the ceiling. The journey begins with the robot positioned at the lecturer’s desk, facing the board, depicted as the green square point in the figure. Utilizing Lidar scans, the robot identifies various points of interest within the room:

- Red Dots: These denote the corners of the room, crucial landmarks for spatial orientation.

- Blue Dots: These represent measurement points where acoustic data and height measurements are collected.

- Green Dots: These serve as navigation aid points, guiding the robot’s path.

As the robot embarks on its navigation, it follows a predetermined path indicated by arrows. The complete route is illustrated in the figure. During initial tests, it was discovered that the robot’s hardware configuration couldn’t execute the entire route based on a single scan. Positioning errors accumulated, limiting the robot’s ability to navigate beyond two points of interest. Additional hardware, such as an accelerometer, was considered to mitigate this. However, the chosen solution involves working with pre-defined trajectories and performing a Lidar scan before each point-to-point movement.

With this approach, the robot continuously updates its knowledge of its next destination based on a new scan performed at each stage of navigation. Once the robot completes its entire trajectory, it collates all the data collected throughout the journey, including ceiling height measurements and room area calculations. This comprehensive dataset, along with the recordings acquired at each measurement point, is transmitted to the stationary unit for further processing and decoding.

In summary, the navigation process bridges the gap between data acquisition through measurements and the robot’s movement within the room. It ensures efficient and accurate spatial exploration, enhancing the overall functionality of the system.

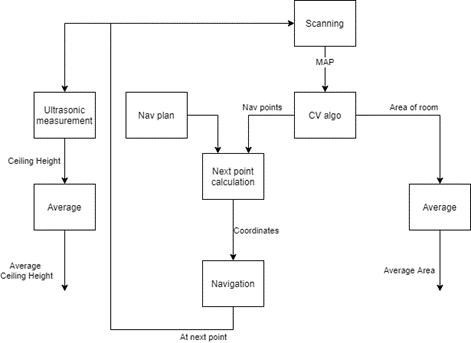

Measurement Process: Integrating Data Collection

The measurement process encompasses various essential components that harmoniously contribute to the overall functionality of the system:

- Scanning and Mapping: The robot initiates the process by scanning its surroundings using Lidar technology. These scans are then utilized in conjunction with computer vision algorithms to create a detailed map of the environment.

- Identifying Points of Interest: Computer vision algorithms are employed to identify and mark specific points of interest within the room. These points could include corners of the room, where walls meet, and other significant landmarks.

- Determining Room Area: Using the acquired data, the system calculates the area of the room, providing valuable spatial information that aids in navigation and acoustic analysis.

- Ceiling Height Measurements: Height measurements of the room’s ceiling are obtained through ultrasonic sensors. These measurements are performed at multiple locations within the room, ensuring accuracy. To account for any hardware variability, the system averages these height measurements.

- Microphone Recordings: During each iteration of the measurement process, the robot records acoustic data using the microphone. These recordings are timestamped and associated with the specific point at which they were collected.

- Data Transmission: After the measurement process is completed, all the collected data, including Lidar scans, identified points of interest, room area calculations, height measurements, and microphone recordings, are transmitted to the stationary unit.

This comprehensive data collection and integration process are fundamental to the system’s ability to perform accurate acoustic measurements and navigate its environment effectively. The combination of sensor data, computer vision, and acoustic recordings ensures a holistic understanding of the room and its acoustic properties.
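
How the room area is computed is not detailed in the text. One simple possibility, shown here purely as an assumption, is the shoelace formula applied to the detected corner points, followed by a conversion from map pixels to meters. The function names, the corner coordinates and the scale of 0.02 m per pixel are hypothetical.

```python
def polygon_area(corners):
    """Shoelace formula for a simple polygon given as ordered (x, y) corners."""
    twice_area = 0.0
    count = len(corners)
    for i in range(count):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % count]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def room_area_m2(corners_px, metres_per_pixel):
    """Convert an area measured in map pixels to square meters."""
    return polygon_area(corners_px) * metres_per_pixel ** 2


# Hypothetical corners on a 500x500 map, ordered around the room.
corners_px = [(50, 100), (450, 100), (450, 400), (50, 400)]
area = room_area_m2(corners_px, metres_per_pixel=0.02)
print(f"room area: {area:.1f} m^2")
```

The corners must be listed in order around the room outline; an unordered list would describe a self-intersecting polygon and give a wrong area.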

Figure 6-7: Measurement process

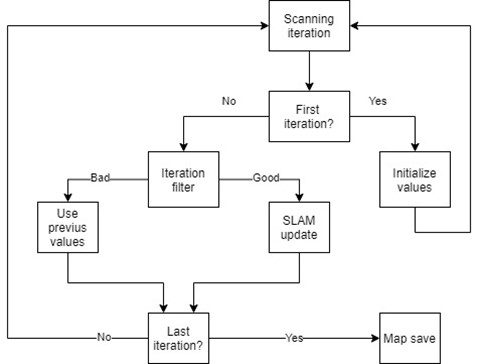

Scanning

The scanning process is conducted through iterative measurements obtained from the Lidar sensor. In each iteration, data is collected as the Lidar completes one full rotation. The frequency of data reception is determined by the rotational speed of the Lidar. The received data, comprising angle and distance information, undergoes verification to ensure measurement quality. If the data is deemed reliable, the SLAM (Simultaneous Localization and Mapping) algorithm updates the map.

Figure 6-8: Scanning process

To create an accurate representation of the environment, the robot performs a sufficient number of iterations. In this system, approximately 100 iterations are necessary to generate a precise spatial map. Once the required iterations are complete, the most recent map is saved as a .png image.

Figure 6-9: SLAM map

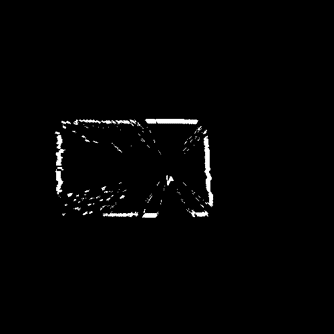

The output of the SLAM algorithm is represented as a grayscale image. In this representation, white areas signify open spaces without obstacles, black areas denote obstacles, and gray regions indicate unscanned or unknown areas. The size of the image, measured in pixels, can be configured to control resolution. Higher resolution requires more computational resources and time. Given the system’s constraints, a practical image size is set at 500x500 pixels.

The Lidar’s location within the image remains consistent across scans, always positioned at the center. In this setup, the Lidar’s location after each scan is defined as (250, 250) on the image grid.

This scanning process and SLAM algorithm output generation enable the robot to construct an accurate spatial map of its surroundings, facilitating effective navigation and acoustic measurements.

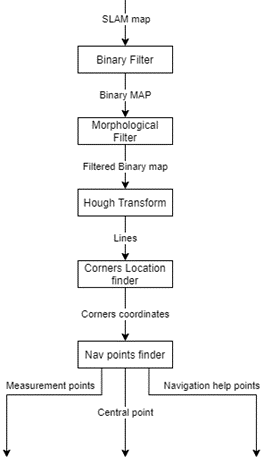

Computer vision algorithms

To determine the optimal points for navigating in space, it’s crucial to identify easily locatable reference points across various scans, locations, and robot angles. Our initial focus is on the walls, as their relative positions remain consistent between scans. We achieve this by employing computer vision algorithms, primarily leveraging the OpenCV library. This algorithm comprises several key stages:

Figure 6-10: CV processing

- Pre-processing Stage:

- Utilizing a Binary Filter: This stage establishes a threshold to distinguish obstacles from walls. It helps in differentiating what constitutes a wall and what qualifies as an obstacle.

Figure 6-11: binary filter output-

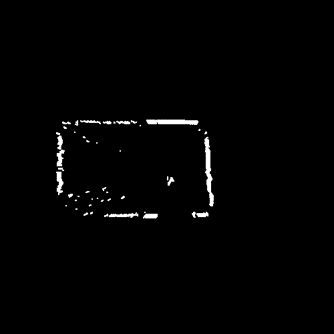

Applying a Morphological Filter: The morphological filter, consisting of dilation and erosion operations, plays a pivotal role. Dilation enhances object visibility and fills small holes, while erosion removes unwanted elements. This step is crucial in removing extraneous features, such as chair legs in the classroom, which align with the scanning direction of the Lidar.

-

It’s worth noting that while the walls may still be imperfect, and there may be white dots on the map, these imperfections do not affect the subsequent stages of the process.

Figure 6-12: Morphological filter outputThe filtered output reveals white areas representing obstacles. Within this output, we can identify lines representing walls and lines that are by-products of chair legs, which align radially with the Lidar’s scanning direction.

-

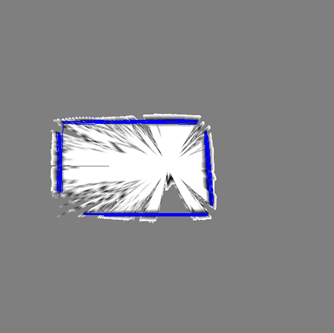
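
The effect of thresholding followed by erosion and dilation can be illustrated on a tiny map in pure Python. The project uses OpenCV for these steps; the functions below are simplified stand-ins with a fixed 3x3 neighborhood, and the map, the threshold and the order of operations (erosion first, then dilation) are assumptions chosen to show how an isolated speck is removed while a wall survives.

```python
def binarize(gray_map, threshold=50):
    """Mark dark (obstacle) pixels as 1 and everything else as 0."""
    return [[1 if pixel < threshold else 0 for pixel in row] for row in gray_map]


def _filter_3x3(image, combine):
    """Apply combine() to the 3x3 neighborhood of every pixel."""
    height, width = len(image), len(image[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [image[j][i]
                      for j in range(max(0, y - 1), min(height, y + 2))
                      for i in range(max(0, x - 1), min(width, x + 2))]
            row.append(combine(window))
        result.append(row)
    return result


def dilate(image):
    """A pixel becomes 1 if any neighbor is 1 (grows objects, fills holes)."""
    return _filter_3x3(image, max)


def erode(image):
    """A pixel stays 1 only if all neighbors are 1 (removes small objects)."""
    return _filter_3x3(image, min)


# Toy 7x7 map: white free space, a three-pixel-thick black wall and one speck.
FREE, WALL = 255, 0
gray_map = [[FREE] * 7 for _ in range(7)]
for wall_row in (2, 3, 4):
    gray_map[wall_row] = [WALL] * 7
gray_map[6][3] = WALL  # isolated obstacle, e.g. a chair leg

binary = binarize(gray_map)
opened = dilate(erode(binary))
for row in opened:
    print("".join("#" if pixel else "." for pixel in row))
```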

Wall Detection:

- With the map now preprocessed and refined, it is ready for wall detection.

- The Hough transform is used to identify lines within the image. Its parameters, including the minimum line length, were calibrated in the field.

- The Hough transform yields a list of coordinates, with each row containing four values representing the X and Y coordinates at both the beginning and end of each line. This list may be extensive, particularly when multiple lines or segments contribute to a single wall.

Figure 6-13: Hough transform

- Line Grouping and Intersection Point Calculation:

- The next step involves determining which lines correspond to the walls and subsequently categorizing them into four distinct groups, one group per wall.

- To calculate the intersection points, a specialized function is employed.

- The algorithm first calculates the angle of each line within the image plane and sorts the lines into two groups by angle. Lines belonging to parallel walls have angles that are equal or differ by about 180 degrees, while lines belonging to adjacent walls differ by approximately 90 degrees.

- Once these two sets of lines have been identified based on their angles, the algorithm can determine the intersection points of these lines, which also serve as the corners of the room.
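The angle-based grouping can be illustrated with a short sketch. The helper names, the 15-degree tolerance, and the choice of the first segment as the reference orientation are all assumptions made for this example; the project's own grouping rule may differ.

```python
import math


def line_angle_deg(line):
    """Orientation of a segment (x1, y1, x2, y2) in degrees, modulo 180."""
    x1, y1, x2, y2 = line
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def angular_distance(a, b):
    """Smallest difference between two orientations, between 0 and 90."""
    d = abs(a - b) % 180.0
    return min(d, 180.0 - d)


def split_by_angle(lines, tolerance_deg=15.0):
    """Split segments into two roughly perpendicular groups.

    The first segment sets the reference orientation (an assumption);
    segments matching neither orientation are dropped.
    """
    if not lines:
        return [], []
    reference = line_angle_deg(lines[0])
    lines1, lines2 = [], []
    for line in lines:
        delta = angular_distance(line_angle_deg(line), reference)
        if delta <= tolerance_deg:
            lines1.append(line)
        elif abs(delta - 90.0) <= tolerance_deg:
            lines2.append(line)
    return lines1, lines2
```

Taking orientations modulo 180 degrees means a segment and its reversed copy land in the same group, which matches the observation that parallel walls may differ by about 180 degrees.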

However, finding these intersection points poses some challenges. Lines may be oriented at any angle, so slope-based calculations can yield infinite values for vertical lines. Additionally, two detected segments may not actually cross; their intersection point may instead lie on the extension of one or both segments.

def line_intersection(lines1, lines2)

Within the navigation module, a specialized function calculates intersection points using linear algebra. The function takes two lines from different groups as input, where each group contains parallel lines. Consequently, the two lines passed to the function are guaranteed to be oriented at approximately 90 degrees to each other.

The primary objective of this function is to determine the precise locations where these two lines intersect, effectively identifying the corners of the room. This calculation relies on the principles of linear algebra and geometry, ensuring accurate and reliable results for navigation purposes.

How the function works:

$i$ - index of a line in group lines1

$j$ - index of a line in group lines2

\[\Large L_i = \begin{bmatrix} x_{i1} \\ y_{i1} \\ x_{i2} \\ y_{i2} \end{bmatrix} \qquad L_j = \begin{bmatrix} x_{j1} \\ y_{j1} \\ x_{j2} \\ y_{j2} \end{bmatrix}\]

\[\Large D = \begin{bmatrix} \begin{vmatrix} x_{i1} & y_{i1} \\ x_{i2} & y_{i2}\end{vmatrix} \\ \\ \begin{vmatrix} x_{j1} & y_{j1} \\ x_{j2} & y_{j2} \end{vmatrix} \end{bmatrix}\]

\[\Large X_{diff} = \begin{bmatrix} x_{i1} - x_{i2} \\ x_{j1} - x_{j2} \end{bmatrix} \qquad Y_{diff} = \begin{bmatrix} y_{i1} - y_{i2} \\ y_{j1} - y_{j2} \end{bmatrix}\]

\[\Large div = \begin{vmatrix} x_{i1} - x_{i2} & x_{j1} - x_{j2} \\ y_{i1} - y_{i2} & y_{j1} - y_{j2} \end{vmatrix}\]

If $\Large div = 0$, the lines are parallel and do not intersect.

\[\Large x_{intersection} = \frac{\begin{vmatrix}D^T \\ X_{diff}^T\end{vmatrix}}{div} \qquad y_{intersection} = \frac{\begin{vmatrix}D^T \\ Y_{diff}^T\end{vmatrix}}{div}\]
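As an illustration, the determinant formulation can be written in a few lines of Python. The body below is a sketch inferred from the definitions of D, X_diff, Y_diff and div, not the project's source: it takes one line from each group, computes the x coordinate from D and X_diff and the y coordinate from D and Y_diff, and returns `None` for parallel lines (an assumed convention). The helper `det2` is a hypothetical name.

```python
def det2(a, b):
    """Determinant of the 2x2 matrix whose rows are a and b."""
    return a[0] * b[1] - a[1] * b[0]


def line_intersection(line_i, line_j):
    """Intersect the infinite lines through two segments (x1, y1, x2, y2).

    Returns (x, y), or None when div == 0 (parallel lines).
    """
    xi1, yi1, xi2, yi2 = line_i
    xj1, yj1, xj2, yj2 = line_j
    x_diff = (xi1 - xi2, xj1 - xj2)
    y_diff = (yi1 - yi2, yj1 - yj2)

    div = det2(x_diff, y_diff)
    if div == 0:
        return None

    # D holds one determinant per line, built from its two endpoints.
    d = (det2((xi1, yi1), (xi2, yi2)), det2((xj1, yj1), (xj2, yj2)))
    return det2(d, x_diff) / div, det2(d, y_diff) / div
```

Because no slope is ever computed, vertical lines need no special case, and segments that do not touch still yield the point where their extensions meet.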

- Corner Identification and Room Area Calculation

In the navigation module, we use a dedicated function to identify the room’s corners by calculating intersection points. This function processes every possible combination of two lines from different groups, so that each pair forms an angle of approximately 90 degrees. The result is a list of intersection points.

Subsequently, we sort these intersection points into four groups, each representing one corner of the room. To pinpoint the corner location within each group, we average the points in that group. This process is handled by the ‘find_corners’ function.

With the room’s corner locations determined, we can proceed to calculate the room’s area and identify points of interest for further measurements. It’s essential to conduct acoustic and ceiling height measurements at points that meet specific criteria. We aim to avoid placing the microphone too close to large surfaces such as walls or windows while ensuring that measurements occur at distinct and separated locations.

To achieve this, we divide the room into four rectangular areas. Each area is defined by one corner of the room and the room’s central point, which is determined by averaging the coordinates of the corner points. Within each rectangular area, we locate a central point by averaging the vertices of the rectangle.

At the room’s central point (indicated in purple), we conduct both noise recording and acoustic tests using a speaker. At the other four points, one per corner area, we record noise only. Additionally, we measure the ceiling height at all points.
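The corner averaging and the choice of measuring points can be sketched as follows. Only the name `find_corners` comes from the text; its body here is an assumption (a simple greedy clustering with a hypothetical pixel radius), and `mean_point`, `measuring_points` and `room_area` are illustrative helpers. The area is returned in squared map units, so converting it to square metres would require the map scale.

```python
import math


def mean_point(points):
    """Average of a list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def find_corners(points, radius=20.0):
    """Group nearby intersection points and average each group.

    Greedy clustering (an assumption): a point joins the first cluster
    whose current mean lies within `radius`, else it starts a new one.
    """
    clusters = []
    for p in points:
        for cluster in clusters:
            cx, cy = mean_point(cluster)
            if math.hypot(p[0] - cx, p[1] - cy) <= radius:
                cluster.append(p)
                break
        else:
            clusters.append([p])
    return [mean_point(c) for c in clusters]


def measuring_points(corners):
    """Return the room centre and one measuring point per corner area."""
    centre = mean_point(corners)
    # The rectangle spanned by a corner and the centre has its own
    # centre at the midpoint of those two points.
    return centre, [mean_point([c, centre]) for c in corners]


def room_area(corners):
    """Polygon area by the shoelace formula, in squared map units."""
    cx, cy = mean_point(corners)
    ordered = sorted(corners, key=lambda p: math.atan2(p[1] - cy, p[0] - cx))
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ordered, ordered[1:] + ordered[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0
```

Placing each outer point halfway between a corner and the centre keeps the microphone away from the walls while keeping the five measuring locations well separated.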

In the navigation process, two critical waypoints need to be identified: one situated between the two closest measuring points to the board and another positioned in the middle of the two farthest measuring points from the board. Our algorithm efficiently calculates the coordinates of these points regardless of the robot’s angle or location.
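One way to compute these two waypoints is sketched below. It assumes the board's location is already known as a point on the map, which the text does not specify; the function names and the `board` parameter are hypothetical.

```python
import math


def midpoint(a, b):
    """Point halfway between a and b."""
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def board_waypoints(points, board):
    """Return (near_waypoint, far_waypoint) for the measuring points.

    `board` is an assumed (x, y) map position of the board. Needs at
    least four points so the near and far pairs do not overlap.
    """
    if len(points) < 4:
        raise ValueError("at least four measuring points are required")
    ordered = sorted(
        points,
        key=lambda p: math.hypot(p[0] - board[0], p[1] - board[1]))
    near = midpoint(ordered[0], ordered[1])
    far = midpoint(ordered[-2], ordered[-1])
    return near, far
```

Because the points are ranked only by distance to the board, the result does not depend on how the map is rotated or where the robot currently stands.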

To visualize the navigation algorithm, we use a graphical representation. The starting position of the robot is depicted in red, while the next destination is marked in green, providing a clear path for the robot’s movement and navigation objectives.

Figure 6-14: Nav points

Navigation (Drive)

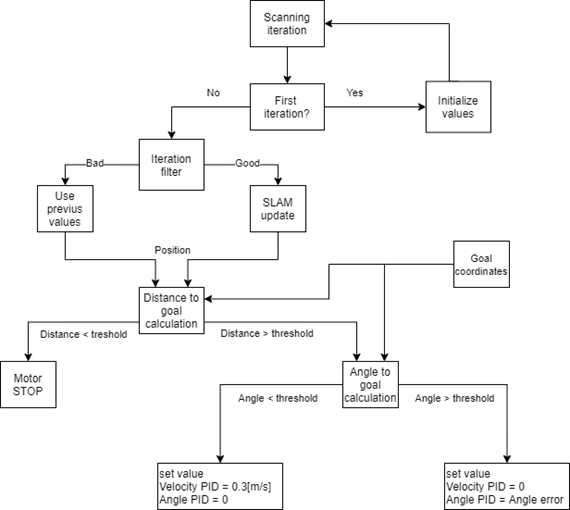

The navigation process closely mirrors the scanning process, with the goal of guiding the robot efficiently to its intended destination.

Here’s an overview of how navigation unfolds:

Figure 6-15: Navigation process

- Initialization and SLAM Integration:

- During navigation, we activate the Lidar sensor to capture data.

- We utilize SLAM (Simultaneous Localization and Mapping) to construct a real-time map for navigation.

- The SLAM system provides us with valuable information about the robot’s position and angle within the environment.

- Goal Monitoring:

- At each scan, a critical check is made to evaluate the robot’s position and angle relative to the intended goal.

- If the robot is deemed close enough to its goal, the navigation process concludes.

- If not, the robot proceeds with navigation, beginning with angle correction.

- Angle Correction:

- Navigation commences only if the robot’s orientation aligns with the goal direction.

- This angle correction process repeats at each scan, ensuring precise alignment with the desired path.

- PID Controllers:

- The navigation algorithm incorporates two PID controllers: one for angle adjustment and the other for controlling speed.

- Speed is determined by measuring the change in position, while the angle is acquired from the SLAM algorithm.

- The initial tuning of these controllers is conducted under controlled laboratory conditions, followed by additional adjustments through field calibrations.

- Drive Function:

- The motor commands are transmitted through the Drive function, which orchestrates the robot’s movement.

- This function receives three key input variables:

- Power for the left-side motors.

- Power for the right-side motors.

- A minimum value (primarily for debugging purposes and not actively utilized).

- The power values are expressed as percentages, allowing for a range from -100 to 100, with the negative sign indicating movement in the opposite direction.

- These power values are employed to regulate the PWM (Pulse-Width Modulation) signals controlling the motors, enabling precise motor control.

In summary, the navigation process combines Lidar data, SLAM integration, goal monitoring, angle correction, PID controllers for angle and speed, and the Drive function to efficiently guide the robot toward its intended destination, ensuring precise control and adaptability throughout the journey.
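A single iteration of this control loop might look like the sketch below. It is an illustration under stated assumptions rather than the project's implementation: the `PID` class, the gains passed to it, the goal radius, heading tolerance and target speed are hypothetical, and the sign convention (a positive heading error speeds up the right-side motors) may be reversed on the real robot or in image coordinates.

```python
import math


class PID:
    """Textbook PID controller with no output limiting."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(deg):
    """Map an angle in degrees to the range -180 to 180."""
    return (deg + 180.0) % 360.0 - 180.0


def clamp_power(value):
    """Limit a motor power percentage to the range -100 to 100."""
    return max(-100.0, min(100.0, value))


def navigation_step(pose, goal, angle_pid, speed_pid, dt,
                    prev_position=None, goal_radius=5.0,
                    heading_tolerance=10.0, target_speed=20.0):
    """One control step; pose = (x, y, heading_deg), goal = (x, y).

    Returns (left_power, right_power), or None once the goal is reached.
    """
    x, y, heading = pose
    dx, dy = goal[0] - x, goal[1] - y

    # Goal monitoring: stop when the robot is close enough.
    if math.hypot(dx, dy) <= goal_radius:
        return None

    heading_error = wrap_angle(math.degrees(math.atan2(dy, dx)) - heading)
    turn = angle_pid.update(heading_error, dt)

    # Angle correction: rotate in place until roughly facing the goal.
    if abs(heading_error) > heading_tolerance:
        return clamp_power(-turn), clamp_power(turn)

    # Speed is estimated from the change in position between scans.
    if prev_position is None:
        speed = 0.0
    else:
        speed = math.hypot(x - prev_position[0], y - prev_position[1]) / dt
    forward = speed_pid.update(target_speed - speed, dt)
    return clamp_power(forward - turn), clamp_power(forward + turn)
```

The returned pair corresponds to the left-side and right-side power inputs of the Drive function; the mapping from percentages to PWM duty cycles is hardware specific and is not shown.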

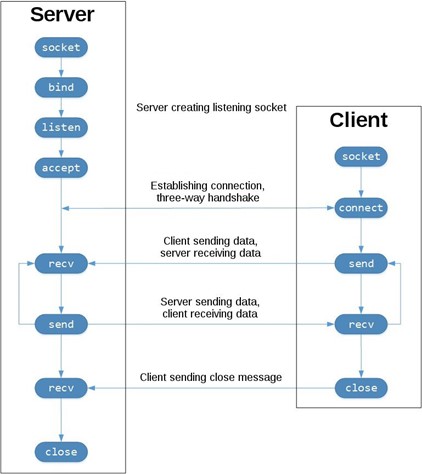

Communication

Python’s standard library includes “socket,” a low-level networking module that is well suited to exchanging data between two devices running Python. Acoubot uses TCP (Transmission Control Protocol) as its network protocol for data transfer.

In TCP-based networks, you typically have two key roles: the server and the client. Here’s an overview of how they interact:

- Server Role (MU - Master Unit):

- The Master Unit (MU) in Acoubot functions as a server.

- In this role, the MU awaits connection requests from clients, such as the Scanning Unit (SU).

- Once a client establishes a connection, data transmission between the server (MU) and the client (SU) begins.

- After the data transfer is completed, the connection is terminated.

- Client Role (SU - Scanning Unit):

- The Scanning Unit (SU) in Acoubot operates as a client.

- In this capacity, the SU initiates a connection request to the server (MU), which is waiting for incoming connections.

- Upon successful connection, the SU can request various tasks, such as scanning the room, sound measurements, or receiving recorded data.

In summary, Acoubot utilizes the Python socket library and a TCP-based network architecture. The MU acts as a server, while the SU serves as a client, enabling seamless communication and data exchange between the two components of the system.
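The server and client roles can be demonstrated on a single machine with the standard `socket` module. This is a minimal sketch, not Acoubot's protocol: the message text `SCAN_ROOM`, the `ACK` reply, and the function names are hypothetical, and a single `recv` call is assumed to be enough because the messages are short.

```python
import socket
import threading


def serve_once(server_sock, handler):
    """Server role: accept one client, answer one request, then close."""
    conn, _addr = server_sock.accept()
    with conn:
        request = conn.recv(1024).decode("utf-8")
        conn.sendall(handler(request).encode("utf-8"))


def send_request(host, port, message):
    """Client role: connect, send one request, and return the reply."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(message.encode("utf-8"))
        return sock.recv(1024).decode("utf-8")


def demo():
    """Run server and client in one process over the loopback interface."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    worker = threading.Thread(
        target=serve_once, args=(server, lambda req: "ACK " + req))
    worker.start()

    reply = send_request("127.0.0.1", port, "SCAN_ROOM")
    worker.join()
    server.close()
    return reply
```

On the real system the two functions would run on separate devices, with the client connecting to the server's network address instead of the loopback address.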

Figure 6-15: Communication

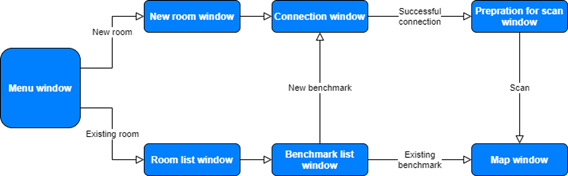

User Interface

Figure 6-16: GUI

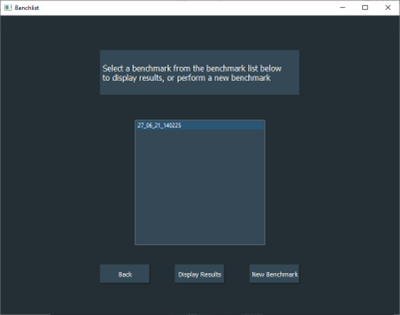

To make Acoubot user-friendly, we developed a Graphical User Interface (GUI). The GUI simplifies the process of initiating a new benchmark and reviewing both ongoing and past benchmarks.

To build the GUI, we used PyQt5, a set of Python bindings for Qt. Qt is a widget toolkit for creating cross-platform applications that run on various software and hardware platforms, including Linux, Windows, macOS, Android, and embedded systems. The key advantages of PyQt5 and Qt are a single codebase combined with a native application experience, native capabilities, and good performance. Consequently, the application can also run on devices such as tablets.

The GUI is designed in a wizard-like flow, with each window providing clear descriptions and simple instructions to guide the user. At the bottom of each window, you’ll find both “continue” and “back” buttons for user convenience.

The integration of the GUI also prompted us to restructure the code into a front-end and back-end architecture, enhancing code organization and maintainability.

Each window in the GUI is encapsulated within a class that encompasses two core functions:

- setupUi: This function configures the graphical attributes of the window, including parameters such as color, position, font, shape, and buttons.

- retranslateUi: Responsible for handling the text and strings within the window, this function facilitates the localization of the GUI by accommodating multiple languages for the same buttons or text boxes.

Additionally, supplementary functions have been developed to manage user interactions with buttons and other features, ensuring a smooth and user-friendly experience.

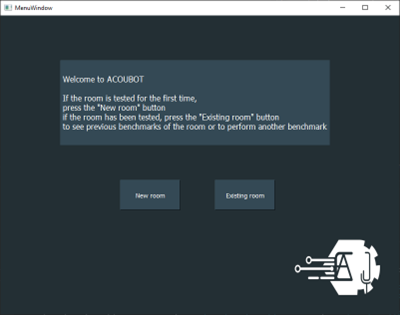

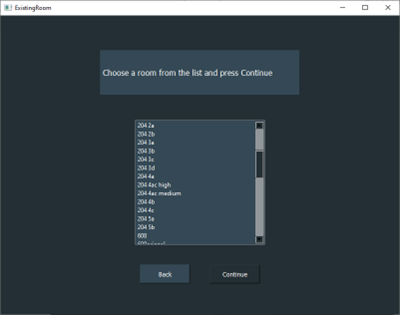

The Home Screen

The Home Screen of the app provides the user with the choice to initiate a new benchmark in a fresh room or access data from a previously benchmarked room. This interface aims to simplify the user’s interaction with the application, offering clear options for their desired action.

Figure 6-17: GUI menu window

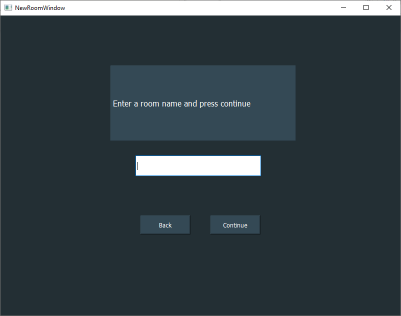

New Room Window

In the “New Room” window, the user can input the name of the room, typically denoting it by a descriptor such as a class number. This information is crucial for organizing and distinguishing benchmarks associated with different rooms.

Figure 6-18: GUI new room window

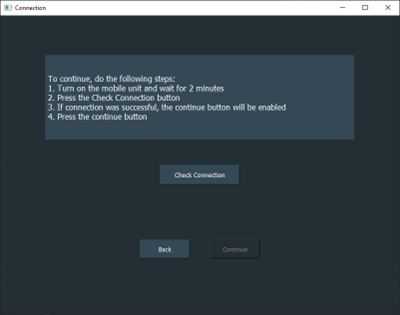

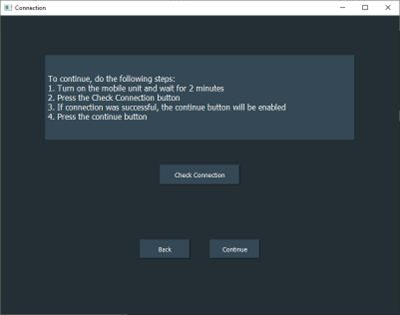

Connection Window

In the “Connection Window,” users can perform a connection check to ensure that the benchmark process proceeds smoothly without any connectivity issues.

Figure 6-19: GUI connection window

The system will verify the connection, and if successful, it will enable the “Continue” button, allowing users to proceed further with the benchmark process. This step helps guarantee that the necessary connections are established before proceeding.

Figure 6-20: GUI connection window 2

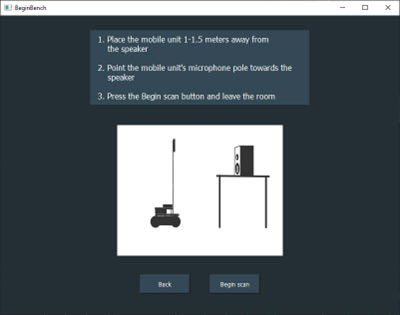

Pre-scan Preparations Window

In the “Pre-scan Preparations” window, users are guided through the necessary steps to prepare for the benchmark scan. Clear instructions and diagrams are provided to illustrate where to place the speaker and the Master Unit (MU) for optimal scanning conditions. This ensures that the benchmarking process is conducted accurately and effectively.

Figure 6-21: GUI begin benchmark

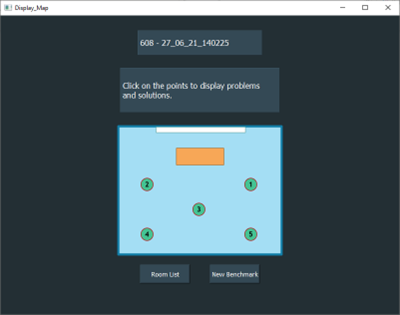

In the “Map Window,” users can access important information related to the benchmark. Here’s a breakdown of its key elements:

Header Information:

In the upper text box, users can view essential details such as the room number and the date and time of the benchmark. This information provides context for the benchmark data.

Interactive Room Map:

The central focus of this window is an interactive map of the room. The map displays various measuring points strategically placed throughout the room.

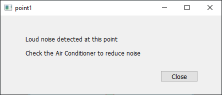

Measuring Points:

Each measuring point on the map is represented as a clickable button. Users can click on these points to access additional information about specific issues or aspects related to that point.

Description Window: